Published: Friday, 14 June 2019 16:13

Written by Riccardo Gallazzi

News from the AWS world

As every month, the world of Amazon Web Services brings new products and features. This month we'd like to tell you about a new S3 storage class, Glacier Deep Archive, designed for storing large amounts of data like the "usual" Glacier but at a price up to 75% lower, with data retrievable within 12 hours. The Single Sign-On service expands its coverage to new regions. Open Distro for Elasticsearch, as the name suggests, combines Elasticsearch, a distributed, document-oriented search and analytics engine used for real-time log analysis and monitoring, and Kibana, an advanced data visualization tool, making them available as RPM packages and Docker containers (it is not a fork of the two projects: contributions are pushed upstream). Redshift now includes automatic Concurrency Scaling to handle bursts of activity. Direct Connect gets a new console that lets you reach services in different Regions (excluding China) from a single point. The SNS notification service now supports 5 new languages, including Italian. As for containers, EKS (Amazon Elastic Container Service for Kubernetes) supports Windows Server workloads, although still in preview. Finally, the new Deep Learning Containers come with pre-installed deep learning frameworks (TensorFlow, Apache MXNet and PyTorch).

Read more ...

Published: Thursday, 02 May 2019 16:32

Written by Guru Advisor

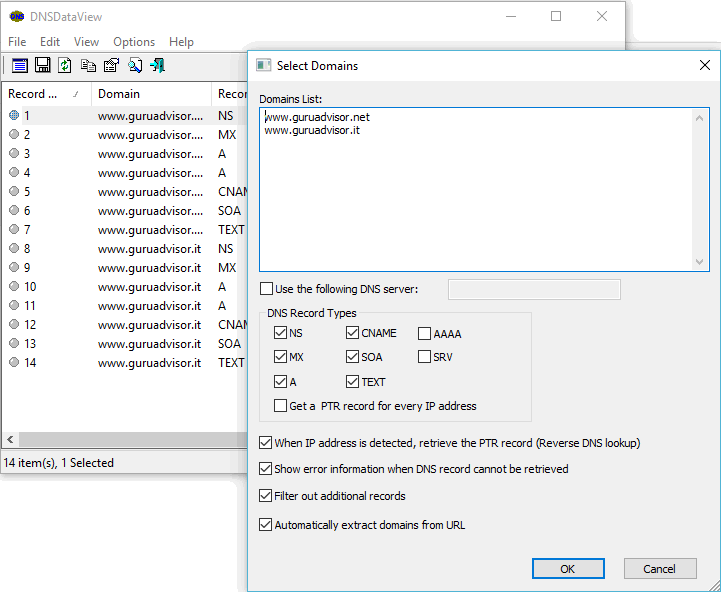

Intro article -> An introduction to GitLab

GitLab’s homepage looks as follows, with a toolbar at the top and, on the left, an activity panel for the current repository with access to its branches. Clicking Commits shows the commit history, while the Branches section shows the state of each branch. Commits make it possible to identify which users worked on which files and what they did (addition, modification, deletion).

GitLab: creating a repository

You must register at gitlab.com and create an account in order to use GitLab; you'll then be redirected to the main projects page, which can also be reached from the Projects item in the toolbar. The best way to start a project is to create a group that defines users, permissions and repositories, then click Create a Project.

This is when you can decide whether to start a project from scratch, from a template or by importing it. In the Visibility Level section you can set who can access the project: Private, Internal (any user logged in to gitlab.com) or Public. Public projects can be accessed by anybody, while Internal is the ideal solution when GitLab is installed on an on-premises platform.
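The same choice can also be made programmatically. The minimal Python sketch below, assuming GitLab's REST API v4, prepares (without sending) a `POST /projects` call, whose `name`, `visibility` (`private`, `internal` or `public`) and optional `namespace_id` parameters mirror the options above. The helper name, the token and the group ID are hypothetical placeholders of our own; check the API documentation of your GitLab version before relying on it.

```python
import json
import urllib.request
from typing import Optional

# Base URL of GitLab.com's REST API; an on-premises install would use its own host.
GITLAB_API = "https://gitlab.com/api/v4"
VISIBILITY_LEVELS = ("private", "internal", "public")


def build_create_project_request(
    token: str,
    name: str,
    visibility: str = "private",
    namespace_id: Optional[int] = None,
    base_url: str = GITLAB_API,
) -> urllib.request.Request:
    """Prepare (without sending) a POST /projects request for the GitLab REST API."""
    if visibility not in VISIBILITY_LEVELS:
        raise ValueError(f"visibility must be one of {VISIBILITY_LEVELS}")
    payload = {"name": name, "visibility": visibility}
    if namespace_id is not None:
        # Create the project inside a group instead of the user's personal namespace.
        payload["namespace_id"] = namespace_id
    return urllib.request.Request(
        f"{base_url}/projects",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "PRIVATE-TOKEN": token,  # personal access token with the "api" scope
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending it is then a matter of `with urllib.request.urlopen(req) as resp: project = json.load(resp)`. Keeping the request construction separate from the network call makes it easy to inspect what will be sent before touching a real GitLab instance.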

The main management window has the commands to start working with a plain project.

Read more ...

Published: Thursday, 13 December 2018 15:47

Written by Veronica Morlacchi

Data protection by design is one of the fundamental criteria set out by the now well-known GDPR that a controller of personal data processing must comply with, both when determining the means of that processing and at the time of the processing itself, in fulfilling its duty of accountability. Technology, too, must be designed to operate in compliance with privacy by design and, therefore, with respect for the fundamental rights of the natural persons whose data are processed.

So-called privacy by design, that is, data protection by design, is one of the cornerstones of the GDPR. It refers to the approach to be adopted when a processing of personal data is conceived, even before it begins, namely to the technical and organisational measures to be adopted in setting up that processing of “personal data”, which, incidentally, Art. 4(1) defines as “any information relating to an identified or identifiable natural person (‘data subject’)”.

Read more ...

Published: Wednesday, 11 July 2018 10:56

Written by Riccardo Gallazzi

New versions of Nextcloud are available

Nextcloud has released versions 13.0.3 and 12.0.8.

The improvements introduced by the new versions include: support for hosting databases over IPv6, OAuth compatibility, improved logging and ban management, better error handling in Workflow, and links to the privacy policy, again from a GDPR perspective as described in the GDPR Compliance Kit. The changelogs are available at this address.

The iOS client was also updated, introducing full support for Apple mobile devices.

Please note that version 11 is no longer actively supported.

Read more ...

Published: Thursday, 05 July 2018 10:25

Written by Riccardo Gallazzi

Previous article -> An introduction to Docker pt.2

The Docker introduction series continues with a new article dedicated to two fundamental elements of a container ecosystem: volumes and connectivity.

That is, how to let two containers communicate with each other and how to manage data in a given folder on the host.

Storage: volumes and bind-mounts

Files created within a container are stored in a writable layer that belongs to the container itself, with some significant consequences:

- data do not persist once the container is removed.

- it can be difficult to get data out of the container if another process needs them.

- the writable layer is tightly coupled to the host where the container runs, and it can't be easily moved between hosts.

- this layer requires a dedicated storage driver, which has an impact on performance.

Docker addresses these problems by letting containers store files directly on the host, through volumes and bind mounts.
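The difference between the two options shows up in how a container is started. The minimal Python sketch below only assembles a `docker run` command line (it does not execute it) using the `--mount` flag, which takes `type=volume` for a named volume managed by Docker and `type=bind` for an existing host directory. The helper names, image and paths are hypothetical examples.

```python
import shlex
from typing import List, Sequence, Tuple


def docker_run_args(
    image: str,
    volumes: Sequence[Tuple[str, str]] = (),
    binds: Sequence[Tuple[str, str, bool]] = (),
) -> List[str]:
    """Assemble (but do not run) a `docker run` command with volumes and bind mounts.

    volumes: (volume_name, container_path) pairs; Docker creates and manages the volume.
    binds:   (host_path, container_path, read_only) triples; an existing host path is mounted.
    """
    args = ["docker", "run", "--detach"]
    for name, target in volumes:
        args += ["--mount", f"type=volume,source={name},target={target}"]
    for host_path, target, read_only in binds:
        spec = f"type=bind,source={host_path},target={target}"
        if read_only:
            spec += ",readonly"  # the container cannot modify the host files
        args += ["--mount", spec]
    args.append(image)
    return args


def as_shell_command(args: List[str]) -> str:
    """Quote the argument list so it can be pasted into a shell."""
    return shlex.join(args)
```

Passing the returned list to `subprocess.run(args, check=True)` would start the container. The named volume outlives the container when it is removed, while the bind-mounted directory is simply the host's own folder, visible to any other process on the host.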

Read more ...

Published: Tuesday, 03 July 2018 14:04

Written by Lorenzo Bedin

In this article we focus on the services offered with Cloud Server instances: rather than analyzing performance and hardware characteristics (a topic partially covered in a previous column on GURU advisor), we analyze services and features.

We have taken into consideration the main operators of the sector and compared some service macro-areas, then gone into the details of each: from options included at no additional cost to instance customization and available logs and alerts. The article covers the main and most requested aspects, while the tables contain detailed information for a complete comparison.

The products

We considered two families of products: Cloud Servers used mainly for Web applications and billed monthly, and more specialized, powerful instances billed by actual usage time. Amazon Lightsail, DigitalOcean droplets and RamNode instances fall in the first category, while the second includes Google Compute Engine, Amazon EC2, Azure and OVH instances, Aruba Cloud Pro and Stellar by CoreTech.

Read more ...

Published: Thursday, 12 April 2018 18:00

Written by Riccardo Gallazzi

Kontena joins the Open Container Initiative

Kontena joins the Open Container Initiative (OCI).

Kontena is an open-source solution that allows you to manage containers in production, in a manner similar to Kubernetes.

It includes high availability, affinity rules, health checks, orchestration, on-demand volume creation, multi-host networking, multicast and hybrid cloud support, VPN access, secrets management and Let's Encrypt certificates, role-based access control, load balancing, SSL termination, automatic scaling, support for Docker Compose, service discovery, monitoring and logging with support for Fluentd and StatsD, a CLI, shells and a Web UI, and a stack and image registry.

The Open Container Initiative is a Linux Foundation project launched in 2015 by Docker, CoreOS and other leaders in the container sector, which aims to create open standards for container formats and runtimes. Its members include Docker, CoreOS, VMware, Red Hat, SUSE, Oracle, OpenStack, Intel, Mesosphere, Google, AWS and, now, Kontena.

Google Cloud: 96 vCPU instances and support for Windows containers

Google Cloud announces new instances with 96 vCPUs.

Instances can have up to 624GB of RAM and Intel Xeon Scalable processors (Skylake) with the AVX-512 instruction set, and are available in the us-central1, northamerica-northeast1, us-east1, us-west1, europe-west1, europe-west4, asia-east1, asia-south1 and asia-southeast1 regions.

Google also announced support for Windows containers (run via Docker) through optimized VM images.

Read more ...

Published: Wednesday, 11 April 2018 14:36

Written by Veronica Morlacchi

Data portability in the new European Regulation 2016/679

A new obligation for personal data controllers and a new right for data subjects: let’s look at its content, its legal basis and how it works in practice.

Why should one be interested in data portability and understand what it means?

The date of 25 May 2018 is approaching: on that day the GDPR will become applicable in all EU countries. The new regulation introduces several novelties that must be understood, whether you are the natural person to whom the personal data refer (as you gain new rights) or the controller receiving and processing the data (as you take on new obligations). One of the main new features is the so-called “right to data portability”, set out in Article 20 and Recitals 68 and 73 of the GDPR and illustrated by the Guidelines adopted on 13 December 2016 (last revised on 5 April 2017), the so-called document WP 242, written by the Article 29 Working Party (“WP 29”).

The text of the GDPR can be accessed here, while the WP 242 document can be accessed here.

Read more ...

Published: Wednesday, 11 April 2018 14:29

Written by Riccardo Gallazzi

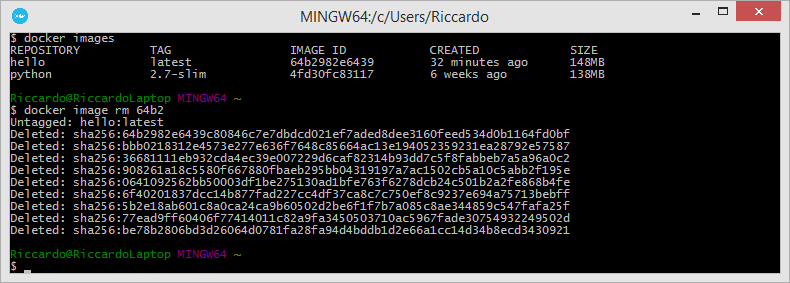

Previous article -> Introduction to Docker - pt.1

Images and Containers

An image is an ordered set of root filesystem updates and related execution parameters to be used in a container runtime; it has no state and is immutable.

A typical image has a limited size, doesn’t require any external dependency and includes all the runtimes, libraries, environment variables, configuration files, scripts and everything else needed to run the application.

A container is the runtime instance of an image, that is, what the image becomes in memory when it is run. Generally a container is completely isolated from the underlying host, but access to files and networks can be configured so that it can communicate with other containers or with the host.

Conceptually, an image is the general idea, and a container is the actual realization of that idea. One of Docker’s strengths is the ability to create minimal, lightweight and complete images that can be ported across different systems and platforms: the related container can then be run consistently, avoiding problems related to the compatibility of packages, dependencies, libraries and so forth. Everything the container needs is already included in the image, which is what makes the image portable.
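The relationship between an immutable image and its containers can be illustrated with a toy model. The Python sketch below is a simplification of our own, not Docker's actual storage driver: an image is a frozen, ordered tuple of read-only layers (here, plain path-to-content maps) plus a command, and each container adds its own writable layer on top, so changes made by one container never alter the image or other containers started from it.

```python
from dataclasses import dataclass, field
from types import MappingProxyType
from typing import Dict, Mapping, Optional, Tuple

# Toy file content; None in a layer marks a file deleted in that layer ("whiteout").
Content = Optional[str]


@dataclass(frozen=True)
class Image:
    """Stateless and immutable: ordered read-only layers plus execution parameters."""
    layers: Tuple[Mapping[str, Content], ...]
    cmd: Tuple[str, ...] = ()

    @staticmethod
    def build(*layers: Dict[str, Content], cmd: Tuple[str, ...] = ()) -> "Image":
        # Copy each layer and wrap it in a read-only view so it cannot be changed later.
        return Image(tuple(MappingProxyType(dict(layer)) for layer in layers), cmd)


@dataclass
class Container:
    """A runtime instance of an image: the image layers plus a private writable layer."""
    image: Image
    writable: Dict[str, Content] = field(default_factory=dict)

    def read(self, path: str) -> Content:
        # Search the writable layer first, then the image layers from top to bottom.
        for layer in (self.writable, *reversed(self.image.layers)):
            if path in layer:
                return layer[path]
        return None

    def write(self, path: str, data: str) -> None:
        # Copy-on-write: the change lands only in this container's layer.
        self.writable[path] = data

    def delete(self, path: str) -> None:
        # Hide the file from this container without touching the image.
        self.writable[path] = None
```

Two `Container` objects built from the same `Image` share its layers but not their `writable` dictionaries, which also mirrors why data written only to a container's own layer is lost when that container is removed.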

Read more ...

Published: Monday, 29 January 2018 12:21

Written by Riccardo Gallazzi

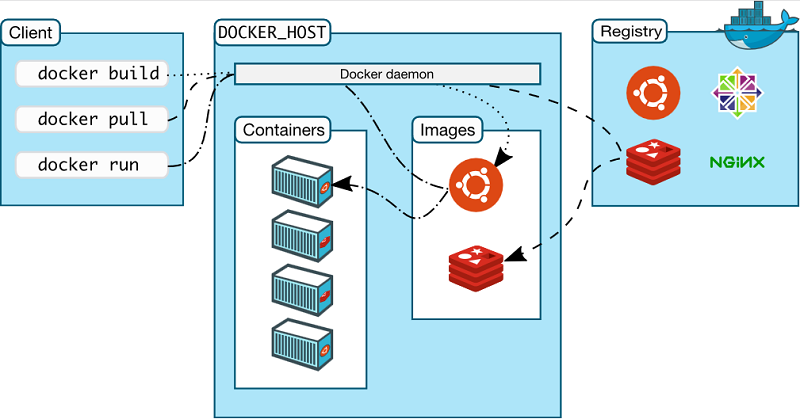

You have surely heard about it: it’s one of the hottest technologies of the moment and it’s gaining momentum quickly. The numbers presented at DockerCon 2017 were about 14 million Docker hosts, 900,000 apps, 3,300 project contributors, 170,000 community members and 12 billion image downloads.

In this series of articles we’d like to introduce the basic concepts of Docker, so as to build a solid foundation before exploring its wide ecosystem.

The Docker project was born as an internal project at dotCloud, a PaaS company, and was based on the LXC container runtime. It was introduced to the world in 2013 with a historic demo at PyCon, then released as an open-source project. The following year Docker dropped LXC as its default runtime, because LXC's development was slow and could not keep pace with Docker, and started to develop libcontainer (later the basis of runc), written entirely in Go, with better performance and improved security and isolation between containers. Since then, sponsorships, investments and general interest have kept growing, making Docker a de-facto standard.

Docker is a member of the Open Container Initiative (originally announced as the Open Container Project), a Linux Foundation project that governs the open standards of the container world and includes members like AT&T, AWS, Dell EMC, Cisco, IBM, Intel and the likes.

Docker is based on a client-server architecture: the client communicates with the dockerd daemon, which builds, runs and distributes containers. Client and daemon can run on the same host or on different systems; in both cases the client talks to the daemon through a REST API, over a Unix socket or a network interface. A registry stores images: Docker Hub is a public Cloud registry, while Docker Registry is a private, on-premises registry.
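Because the client simply issues HTTP requests to the daemon, the REST API can also be called directly. The following minimal Python sketch, assuming the daemon listens on the default Unix socket `/var/run/docker.sock` and that the current user is allowed to access it, sends `GET /version` using only the standard library; the class and function names are our own.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks to a Unix domain socket instead of a TCP port."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" is only used for the Host header; the socket path does the routing.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_api_get(path: str, socket_path: str = "/var/run/docker.sock") -> dict:
    """Send a GET request to the Docker Engine REST API and decode the JSON reply."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"Docker API returned HTTP {resp.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

With a running daemon, `docker_api_get("/version")["ApiVersion"]` returns the API version the daemon speaks. The `docker` CLI and the official SDKs go through this same REST API, just with more convenient wrappers.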

Read more ...

Published: Monday, 23 October 2017 11:41

Written by Veronica Morlacchi

What are the most relevant legal implications of using IoT devices, in particular in terms of personal data? Which aspects must be taken into account when developing IoT solutions?

This magazine has described the Internet of Things in the “Word of the Day” column, and in recent issues we published an article dedicated to the protection of IoT devices.

The interest in the topic is easily justified: a recent study by Aruba Networks, “The Internet of Things: Today and Tomorrow”, highlighted that the economic advantages businesses gain from adopting IoT devices appear to exceed expectations, so we can forecast a boom of the trend in the near future, in particular in sectors such as industry, healthcare, retail, “wearable computing” (i.e. wearable devices such as glasses, clothes and watches connected to the network), Public Administration, home automation, and wherever companies create a “smart workplace”.

Therefore, given the wide variety of sectors and the general interest in the topic, the use of IoT devices may give rise to many complications and implications as far as legal aspects are concerned.

Read more ...

Published: Monday, 23 October 2017 11:37

Written by Riccardo Gallazzi

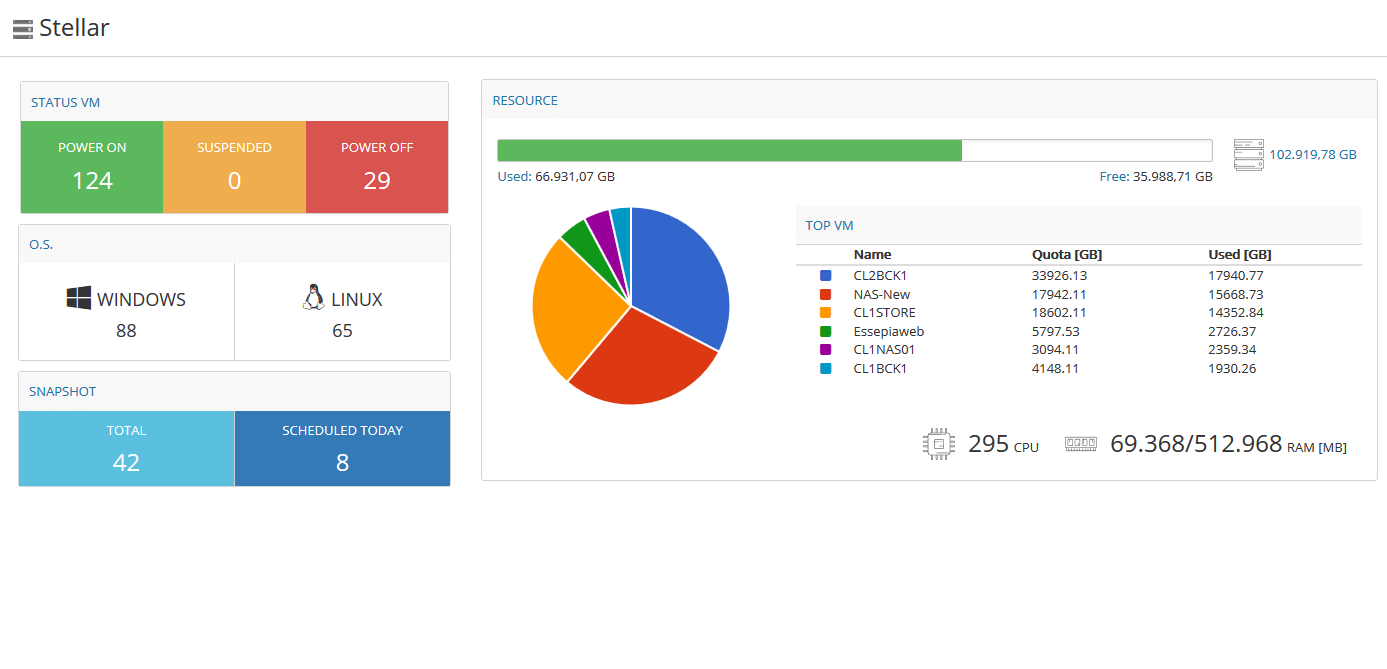

Most companies with IT infrastructures now rely on cloud systems, whether public or hybrid: Stellar offers Cloud Servers aimed at MSPs, EDP managers, sysadmins, Web agencies, software houses and IT pros looking for a reliable, high-performance cloud system.

Stellar’s cloud servers are best suited to production environments that require guaranteed service continuity in addition to high performance, such as email and authentication servers, file sharing, remote desktop, databases, application software (ERP, CRM, MRP, etc.) and, in general, all services that rely on virtualization.

A dedicated infrastructure

Stellar relies on two giants of the sector: VMware vSphere and HPE. Servers are grouped into micro-clusters, called Small Cloud Clusters, each composed of three hosts. The servers are HP DL360 machines with dual Intel Xeon E5 v3 processors, 192GB of RAM, dual 8-port 1 Gbit network cards and redundant power supplies.

Read more ...

Published: Wednesday, 26 July 2017 15:06

Written by Riccardo Gallazzi

The “Magic Quadrant” report for the Cloud IaaS market by Gartner is out

Gartner, the leading company in consultancy, research and analysis in the IT field, published the annual “Magic Quadrant” report for the Cloud IaaS market, which is available at this address.

Among the most interesting results are the first appearance of two players, Alibaba Cloud (“the vendor's potential [is] to become an alternative to the global hyperscale cloud providers in select regions over time”) and Oracle Cloud, and the disappearance of VMware vCloud Air. AWS maintains its leadership, followed by Microsoft Azure: these two players are the only ones in the “Leaders” quadrant, and they share most of the market.

Overall, the Cloud IaaS market is described as fragmented and in a reboot phase (many providers are changing their offerings and infrastructures) and, as the gap between competitors keeps growing, some providers are specializing in specific solutions.

The Cloud market will be worth $200bln by 2020

A study presented by Synergy Research Group forecasts revenues from SaaS and Cloud services growing at an average annual rate of 23-29% over the next five years, reaching $200 billion by 2020. This will go along with an average annual growth of 11% in infrastructure sales to hyperscale Cloud Providers.

The Public Cloud sector will experience the greatest growth (29% per year), followed by Private Cloud (26%) and SaaS (23%) services. The APAC area will be the region with the biggest growth, followed by EMEA and North America; the areas most involved will be databases and IaaS/PaaS services oriented to the IoT.

CommScope to purchase Cable Exchange

CommScope announces its intention to purchase Cable Exchange, a privately held quick-turn supplier of fiber optic and copper assemblies for data, voice and video communications.

Cable Exchange, headquartered in Santa Ana, Calif., manufactures a variety of fiber optic and copper cables, trunks and related products used in high-capacity data centers and other business enterprise applications. The company, founded in 1986, specializes in quick-turn delivery of its infrastructure products to customers from its two U.S. manufacturing centers located in Santa Ana, Calif. and Pineville, N.C.

As more user-driven information and commerce flows through networks, operators are quickly deploying larger and more complex data centers to support growth in traffic and transactions.

Read more ...

Published: Thursday, 08 June 2017 15:11

Written by Riccardo Gallazzi

Gartner’s “Magic Quadrant” for digital commerce is available.

Gartner, the world’s leading IT consulting, research and analysis company, published its yearly “Magic Quadrant” for digital commerce. The report is available at this address.

Among the most interesting results is an expected yearly growth of 15% from 2015 to 2020 in the digital commerce platform market, including licences, support and SaaS services; total expenditure will reach $9.4 billion by 2020, with 53% coming from on-premises solutions.

Read more ...

Published: Thursday, 08 June 2017 15:11

Written by Veronica Morlacchi

There is no doubt about a Provider's direct civil liability for illegal acts performed by the provider itself. But can a provider also be held civilly liable for the dissemination, through its infrastructure, of illegal content by third parties? Drawing inspiration from a recent ruling of the Corte d’Appello di Roma (Court of Appeal of Rome), in this article we will try to clarify the civil liability of Cloud ISPs.

In IT language, the term Provider refers to an intermediary in communications that offers different services: for instance, network access (Network Provider), access to Internet services (Internet Provider), website hosting (Host Provider) and so forth. So, what is the liability of a Provider for such intermediary activity? A recent ruling of the aforementioned Corte d’Appello di Roma, judgment no. 2833 of 29 April 2017, declared a provider civilly liable for illegal acts committed by third parties using the digital platform it provided.

In particular, the third party had illegally used and distributed, through the provider's platform, some TV shows, whose rights holders sued the Provider for compensation.

Read more ...

Published: Monday, 10 April 2017 08:30

Written by Riccardo Gallazzi

Extreme Networks purchases Brocade's datacenter business

Extreme Networks, a network solutions company based in San José, California, is about to acquire Brocade’s datacenter business for $55 million (with additional payments over the following 5 years), according to an official statement.

The acquisition will take place once Singapore-based Broadcom completes its $5.9 billion acquisition of Brocade. Brocade is a San José-based company that produces routers, switches and software solutions for datacenters, and owns Vyatta, the network operating system on which the one used by Ubiquiti is based.

In the past months Extreme Networks purchased the wireless LAN business of Zebra Technologies and is about to purchase Avaya's networking business.

Read more ...

Published: Friday, 27 January 2017 15:21

Written by Veronica Morlacchi

CISPE has recently published the first Code of Conduct for Cloud infrastructure providers: it’s important to know that it exists and what it contains, both for clients interested in Cloud services (when choosing a service) and for providers of such services (to evaluate whether to adhere to it).

In this column we usually cover the security and secrecy of data in Cloud services, also with regard to confidential business content and industrial property to be safeguarded. But the recent publication by CISPE of the first Code of Conduct for Cloud Infrastructure Service Providers, last 27 September, leads us to make a small detour from our usual routine. What is CISPE, in case you don’t know it? The acronym stands for Cloud Infrastructure Services Providers in Europe, and it’s an alliance of about twenty Cloud infrastructure providers operating in different European countries.

Read more ...

Published: Tuesday, 24 January 2017 16:15

Written by Riccardo Gallazzi

Amazon adds a Cloud region: Central Canada

Amazon announced at AWS Executive Insights a new AWS region called Central Canada with two Availability Zones, which adds to the regions already present in North America (Northern Virginia, Ohio, Oregon, Northern California and AWS GovCloud), bringing the number of regions worldwide to 15, with 40 Availability Zones.

AWS opened its first office in Canada only last year, despite already having tens of thousands of customers there, and last August it added CloudFront locations in Toronto and Montreal to meet demand. The new region will be hosted in data centers in Montreal.

“For many years, we’ve had an enthusiastic base of customers in Canada choosing the AWS Cloud because it has more functionality than other cloud platforms, an extensive APN Partner and customer ecosystem, as well as unmatched maturity, security, and performance,” said Andy Jassy, CEO, AWS. “Our Canadian customers and APN Partners asked us to build AWS infrastructure in Canada, so they can run their mission-critical workloads and store sensitive data on AWS infrastructure located in Canada. A local AWS Region will serve as the foundation for new cloud initiatives in Canada that can transform business, customer experiences, and enhance the local economy.”

The new Central Canada region is available for all AWS services, including S3, EC2 and RDS. On 1 January 2017 a new AWS office opened in Dubai (United Arab Emirates).

Read more ...

Published: Monday, 10 October 2016 12:22

Written by Veronica Morlacchi

Link to the previous article: Cloud: how to evaluate a contract - A lawyer's advice

If you are planning to use a Cloud service, pay attention to certain aspects. In the previous issue we covered contractual clauses; today we deal with privacy protection.

Privacy and data protection by a Cloud Provider are among the most delicate topics when agreeing on a contract. When choosing a cloud service, you authorize the provider to manage your data (your own or your clients’), in addition to storing it on the provider’s infrastructure. Needless to say, when choosing the provider it’s very important to understand which rules it is subject to, which guarantees it must provide and how it can manage your data, also from a privacy perspective.

A recent study conducted by ABI and CIPA, “Rilevazione sull’IT nel sistema bancario italiano – Il cloud e le banche. Stato dell’arte e prospettive” (“Survey of IT in the Italian banking system - Cloud and banks: state of the art and outlook”), published in May 2016, revealed that 100% of respondents consider guarantees about privacy and data security of fundamental importance (on par with experience in the sector, and much more than any other factor). On the other hand, banks found these guarantees matched by the services offered by providers in only half of the cases, which signals that the topic is important and requires in-depth analysis.

Read more ...

Published: Monday, 08 August 2016 16:38

Written by Filippo Moriggia

Exclusive interview with Rodolfo Rotondo, VMware Senior Business Solution Strategist (EMEA).

While waiting for the news from VMworld 2016 in the US (Las Vegas, 28 August to 1 September) and VMworld 2016 Europe (Barcelona, 17-20 October), we interviewed Rodolfo Rotondo, a VMware spokesperson who holds a prominent EMEA-level position: Senior Business Solution Strategist.

VMware has always been a leader in innovation: what do you think about the future, and where do you see the best development opportunities? Which technology in your vast catalog is, in your opinion, the most cutting-edge today?

VMware started innovating in the 2000s with computer virtualization, continuing with the software-defined data center, a term coined by VMware itself, which extends virtualization to networks and storage, and then building a bridge between on-premises data centers and public clouds, allowing both bidirectional transfer of workloads and unified management with common policies, enabling true hybrid cloud.

Read more ...

Published: Monday, 08 August 2016 16:35

Written by Lorenzo Bedin

We have analyzed stats from the main hosting providers and studied the most common solutions to publish a website built with WordPress. Here’s what we’ve discovered.

With this article we want to analyze the current situation of the worldwide hosting market, comparing the big players of this sector. As often happens in the business world, the leaders hold the biggest share of the market.

The global podium

GoDaddy is the leading Web Hosting Provider worldwide, with 5.8% of the market, followed by Blue Host (2.5%) and Host Gator (2.1%) in third place (source: Host Advice). Note that all three providers are from the US. Another important fact is the clear gap between the leader and its followers: GoDaddy's adoption percentage is more than double that of the others. The same ranking also holds at country level within the US.

The situation in individual countries

In the US, GoDaddy dominates with 14% of the market, followed by Blue Host (6.5%) and Host Gator (5.4%). Both nationally and worldwide, GoDaddy maintains its dominance with more than twice the share of the runner-up. Despite this first analysis, the US market is quite open.

So where does Amazon, with its Web Services, fit in this ranking? The Cloud colossus places fifth worldwide with 1.8% of the market, and fourth in the US (3.9%).

Two other big names in the Cloud sphere come to mind as well: Microsoft and Google. As their offerings differ from the ones covered in this article, they aren’t taken into account in this Web Hosting ranking.

Read more ...

Published: Monday, 08 August 2016 16:18

Written by Veronica Morlacchi

It’s wise to know that using Cloud services might bring some problems. Let’s start by looking together at some contractual aspects that require particular care.

Anyone who intends to use, for their own business, any Internet service that falls under the definition of Cloud faces several issues: from the content of the contract and the management of data by the provider, to the loss of data transferred outside company walls and their security. These are very interesting and intertwined aspects, which require careful reflection, in particular from a legal standpoint.

The first important aspect is the contract you are about to enter into with the cloud provider, the agreement that will regulate the relationship. Under Italian law, this contract is not specifically regulated by the civil code (codice civile) or by any special law: it’s an atypical contract and, because of that, you must read it carefully, as it contains the primary rules governing the relationship and the responsibilities. Most likely, you will only be able to choose between predefined contractual offerings: a cloud computing contract is usually defined by the provider according to standard contractual models (the so-called “general terms of contract”), which can hardly be negotiated.

Let’s look at some of the main clauses you must pay attention to when choosing a provider and signing a contract.

Read more ...

Published: Wednesday, 29 June 2016 18:06

Written by Lorenzo Bedin

The term MSP is the acronym for Managed Service Provider and identifies a working model nowadays used by many companies in the IT world. In general, the idea of Managed Service implies outsourcing management and maintenance activities, with the ultimate goal of optimizing the use of resources and lowering the client's costs. In this scenario, the Provider is the company or organization that provides the service.

MSP key points

We can define three main points that characterize a Managed Service Provider (MSP onwards):

- unlimited phone/remote help desk;

- proactive management of the infrastructure: backup, security, updates, etc.;

- being a competent intermediary with additional providers and third parties.

Advantages and rates

Essentially, an MSP contract is an “all-inclusive” contract with mutual advantages for both the provider and the client. In this way the client can have a fixed price for IT management, without worrying about any technical aspect or unexpected costs. Moreover, the client knows that the provider's goal is the same as their own, i.e. to reduce downtime and increase the company's overall productivity.

Read more ...

Published: Wednesday, 29 June 2016 18:03

Written by Filippo Moriggia

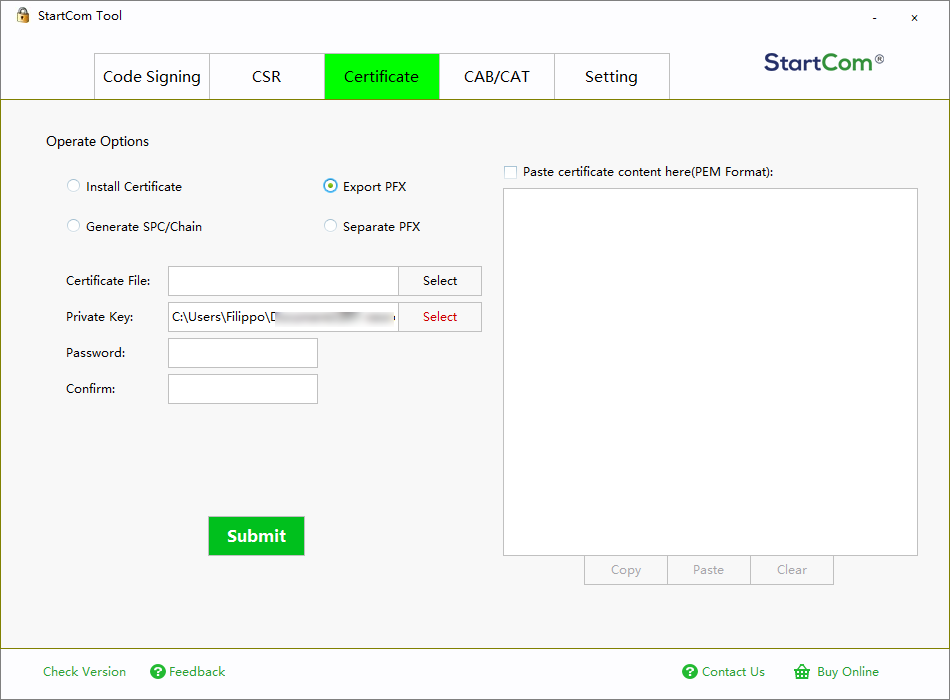

Here’s how to use SSL/TLS, S/Mime, certificates and public key cryptography in order to make Web services and email communications safer

Despite several technologies related to the SSL/TLS protocols having been attacked in the last 3 years, in 2016 a good sysadmin or IT manager can’t tolerate their organization still using systems that transmit information in cleartext.

The use of Transport Layer Security (and its predecessor SSL), it should be clear, is not enough to guarantee the confidentiality of data transmitted over the Internet, and a quick bibliographic search shows the wide range of attacks against these protocols (as in this case and this other one). Still, TLS represents a first, fundamental security level for transmitted data, both when accessing your company's Web services and when accessing email via Webmail or IMAP/POP3/Exchange.

TLS acts as a tunnel and doesn’t modify the ongoing communication between client and server: it only adds a layer that encrypts data between the source and the destination, relying on some key concepts of public key cryptography. Whoever plays the server role needs a certificate, issued by a certification authority or, in the worst case, self-signed: only the certificate holder owns the matching private key, which proves the server's identity during the handshake in which client and server agree on the session keys that actually encrypt the communication.
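On the client side, these checks can be seen in a few lines of Python with the standard `ssl` module: `ssl.create_default_context()` loads the trusted certification authorities and requires a valid certificate whose name matches the host. The sketch additionally refuses protocol versions older than TLS 1.2; that floor and the function names are assumptions made for this example.

```python
import socket
import ssl
from typing import Optional


def make_client_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """TLS client context that verifies the server certificate chain and host name."""
    # Loads the system CA store (or only `cafile`, e.g. an internal CA) and sets
    # verify_mode=CERT_REQUIRED and check_hostname=True.
    ctx = ssl.create_default_context(cafile=cafile)
    # Refuse SSLv3, TLS 1.0 and TLS 1.1.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def fetch_server_certificate(host: str, port: int = 443, timeout: float = 5.0) -> dict:
    """Connect over TLS and return the server certificate verified during the handshake."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=timeout) as raw:
        # The handshake runs here and raises ssl.SSLCertVerificationError if the chain
        # or the host name is invalid (e.g. an untrusted self-signed certificate).
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            return tls.getpeercert()
```

For example, `fetch_server_certificate("www.example.com")["notAfter"]` shows when the certificate expires. For mail, the same context can be passed to `imaplib.IMAP4_SSL(host, ssl_context=make_client_context())`, so that IMAP access gets the same certificate checks as Web traffic.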

Published: Monday, 18 April 2016 16:19

Written by Lorenzo Bedin

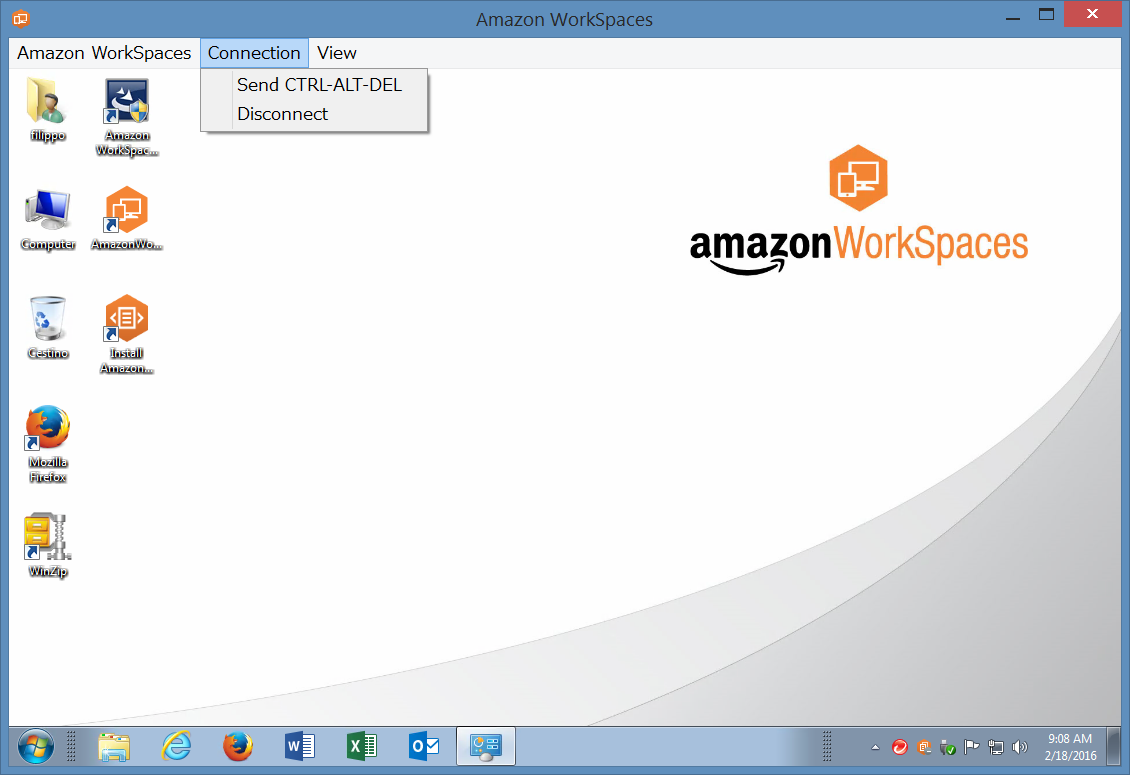

We’ve tried the Virtual Desktop Infrastructure (VDI) as-a-Service system offered by the American colossus. A few mouse clicks are all it takes to create and distribute complete virtual desktops that can be accessed from anywhere.

VDI systems are nothing new in IT: just think of Citrix XenApp or less well-known but rapidly evolving systems such as VMware Horizon. However, particularly for SMBs, the real difference in terms of performance and availability can only be experienced by switching to a Cloud-based solution. Choosing a Cloud Provider with an “as-a-Service” approach means no longer having to deal with any hardware or software infrastructure: all you need is Internet access and any terminal, whether a tablet, an old PC running Linux or an employee’s own laptop (following a BYOD logic), and you’ll be ready to offer an up-to-date, complete and always available business desktop.

Two main limitations to the adoption of these solutions are worth pointing out: one concerns software, the other hardware. The former involves software license management: Microsoft has never been friendly toward VDI solutions, and it is not unusual to find systems where licensing has been misinterpreted or misconfigured, even though everything else has been done properly.

The second limitation concerns guaranteeing good performance for users’ desktops in every situation: it’s not easy to implement a system (hardware, software, hypervisor and so forth) that can provide enough resources under any load, in particular for small System Integrators or less experienced Cloud Providers.

Published: Thursday, 11 February 2016 15:34

Written by Lino Telera

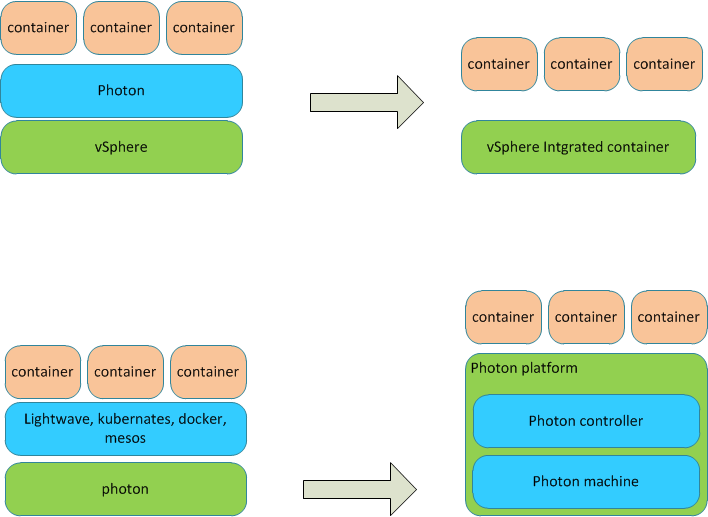

In the previous articles (Part 1 and Part 2) we discussed how Photon and Docker represent a new way to develop next-generation applications and put them into production. Indeed, containers and “microservice” development techniques are the architectural and cultural elements driving a significant shift in how cloud-native applications are managed throughout their life cycle. Since cloud-native applications are a very hot topic in the IT world, new announcements and information that can make some of the elements covered in the previous articles obsolete appear on the Web all the time.

As a matter of fact, the recently announced devbox based on Photon Controller and the new clustering mechanisms for containers are now available to the community through VMware's technology preview channel.

Published: Friday, 05 February 2016 17:14

Written by Lino Telera

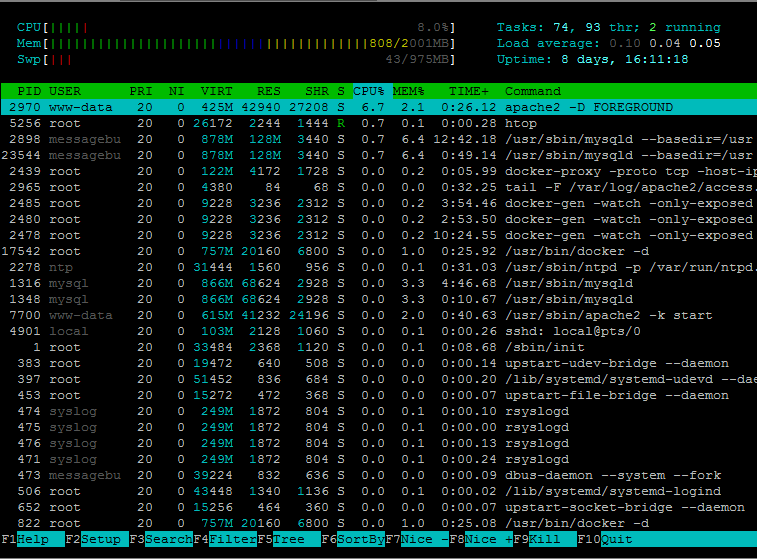

Articles of the VPS column:

Linux comes with countless diagnostic tools that run from the command line interface (CLI), allowing sysadmins to identify and solve problems and malfunctions. Despite the typical limitations of a text-based environment, these utilities are really powerful, as they can be invoked with simple commands or placed in automated scripts. In this article we’ll cover some of the most common: top, ps and netstat.

top and htop: processes, workload and memory under control

top is the command used to monitor processes: it gives a clear indication of the system's health and lets you check parameters such as CPU and memory usage percentages, in addition to the current state of active processes.
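As a rough illustration of what top reports, the sketch below takes a one-shot, top-like snapshot by parsing the output of `ps -eo pid,pcpu,pmem,comm` and sorting by CPU usage, which is top's default sort key. It is a simplification: `ps` reports %CPU averaged over each process's whole lifetime, whereas top measures it over its refresh interval, so the numbers will not match exactly. The helper names are hypothetical.

```python
import subprocess
from dataclasses import dataclass


@dataclass
class Proc:
    pid: int
    cpu: float    # %CPU as reported by ps (lifetime average)
    mem: float    # %MEM as reported by ps
    command: str


def parse_ps(output):
    """Parse the output of `ps -eo pid,pcpu,pmem,comm` into Proc records."""
    procs = []
    for line in output.strip().splitlines()[1:]:  # skip the header line
        # maxsplit=3 keeps command names that contain spaces in one field
        pid, cpu, mem, command = line.split(None, 3)
        procs.append(Proc(int(pid), float(cpu), float(mem), command))
    return procs


def top_by_cpu(procs, n=5):
    """Return the n most CPU-hungry processes, highest first."""
    return sorted(procs, key=lambda p: p.cpu, reverse=True)[:n]


def snapshot(n=5):
    """Run ps on a live Linux system and return a top-like list."""
    out = subprocess.run(
        ["ps", "-eo", "pid,pcpu,pmem,comm"],
        capture_output=True, text=True, check=True,
    ).stdout
    return top_by_cpu(parse_ps(out), n)
```

On a Linux box, `snapshot()` lists the five busiest processes; the parsing is kept separate from the `ps` call so it can be reused in scripts that collect the output elsewhere.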

Published: Tuesday, 19 January 2016 23:20

Written by Giuseppe Paternò

Earlier in this publication I mentioned that the promise of OpenStack is interoperability among components from different vendors or open source projects. As a matter of fact, most of the components described on the previous page can easily be replaced with projects or products from various vendors.

At the time of writing, the only project with no viable alternative from vendors is Keystone. Keystone acts as a service registry and user repository, and therefore plays an important role in OpenStack. While it was originally conceived to hold its own internal users, as Amazon does, development is shifting toward an HTTP interface to existing identity systems, such as LDAP directories or SAML identity providers.
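Keystone's two roles, user repository and service registry, both show up in a single call to its v3 Identity API: a password authentication request to `/v3/auth/tokens` returns a token in the `X-Subject-Token` response header and, in the body, the service catalog listing the endpoints of the other OpenStack services. The sketch below uses only the Python standard library; the helper names, the `default` domain and the Keystone URL you pass in are assumptions, and real deployments would normally use the official OpenStack client libraries.

```python
import json
import urllib.request


def build_password_auth(user, password, project, domain="default"):
    """Request body for Keystone v3 password authentication, scoped to a project."""
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {"name": user, "domain": {"id": domain}, "password": password}
                },
            },
            "scope": {"project": {"name": project, "domain": {"id": domain}}},
        }
    }


def get_token_and_catalog(keystone_url, user, password, project):
    """POST /v3/auth/tokens; return the token and a {service type: public URL} map."""
    body = json.dumps(build_password_auth(user, password, project)).encode()
    req = urllib.request.Request(
        keystone_url.rstrip("/") + "/v3/auth/tokens",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        token = resp.headers["X-Subject-Token"]  # the token travels in a header
        data = json.load(resp)
    # The service catalog is Keystone acting as a service registry.
    endpoints = {}
    for service in data["token"].get("catalog", []):
        for ep in service["endpoints"]:
            if ep["interface"] == "public":
                endpoints[service["type"]] = ep["url"]
    return token, endpoints
```

For example, `get_token_and_catalog("http://controller:5000", "demo", "secret", "demo")` (hypothetical host and credentials) would return the token together with the public endpoints of services such as compute or volume, whatever the cloud actually registers.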

Horizon, the web dashboard, is also unlikely to be replaced, since its colors and logos can easily be customized to suit anyone. Other dashboards exist for OpenStack, but a company that needs a different web interface usually opts for custom development on top of the OpenStack APIs.

The projects where a plugin approach makes sense are Nova, Neutron, Swift and Cinder. The following tables list the most relevant open source and proprietary technologies for each component (please keep in mind that this list may change).

Nova

| Open Source | Proprietary |
|---|---|
| KVM | VMware ESX/ESXi |
| XenServer | Microsoft Hyper-V |
| LXC | |
| Docker | |

Cinder

| Open Source | Proprietary |
|---|---|
| LVM | NetApp |
| Ceph | IBM (Storwize family/SVC, XIV) |
| Gluster | Nexenta |
| NFS (any compatible) | SolidFire |
| | HP LeftHand/3PAR/MSA |
| | Dell EqualLogic/Storage Center |
| | EMC VNX/XtremIO |

Neutron

| Open Source | Proprietary |
|---|---|
| Linux Bridge | VMware NSX |
| Open vSwitch | Brocade |
| MidoNet | Big Switch |
| OpenContrail (Juniper open source) | Alcatel Nuage |
| | Cisco Nexus |

Swift

| Open Source | Proprietary |
|---|---|
| Swift project | EMC Isilon OneFS |
| Ceph | NetApp E-Series |
| Gluster | Nexenta |
| Hadoop with SwiftFS/Sahara | |
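In practice, the plugin approach boils down to naming, in a service's configuration file, the driver class to use, which the service then imports at startup. The sketch below reproduces that mechanism with the Python standard library. The `volume_driver` option and the LVM driver path follow Cinder's configuration conventions, but the helper functions are hypothetical, and OpenStack itself relies on helpers from its own oslo libraries for this. Since Cinder is not installed here, the usage example loads a standard-library class instead.

```python
import configparser
import importlib

# Illustrative cinder.conf fragment selecting the LVM backend driver.
CINDER_SNIPPET = """
[DEFAULT]
volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
"""


def configured_driver(conf_text, option="volume_driver", section="DEFAULT"):
    """Read the dotted driver path from an INI-style configuration."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    return parser.get(section, option)


def load_driver_class(dotted_path):
    """Import 'package.module.ClassName' and return the class object."""
    module_name, _, class_name = dotted_path.rpartition(".")
    module = importlib.import_module(module_name)
    return getattr(module, class_name)
```

Swapping a vendor's product in or out then amounts to changing one line of configuration, e.g. `load_driver_class("collections.OrderedDict")` returns the `OrderedDict` class in exactly the way a real service would load its configured driver.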

Published: Tuesday, 19 January 2016 23:10

Written by Giuseppe Paternò

So now you understand OpenStack, its components and the important role applications play in the cloud. Before I reveal how to be successful with OpenStack, there is one more important piece I want you to understand.

The next marketing buzzword that everybody mentions nowadays is DevOps. As you might guess, DevOps is a blend of the words "Development" and "Operations".

The goal of DevOps is to improve service delivery agility by promoting communication, collaboration and integration between software developers and IT operations. Rather than seeing these two groups as silos that pass work along without really working together, DevOps recognizes the interdependence of software development and IT operations.

In an ideal world, thanks to continuous integration tools and automated tests, a group of developers could bring a new application online without any operations team. For example, Flickr developed a DevOps approach to support a business requirement of ten deployments per day. For the record, this kind of approach is also referred to as continuous deployment or continuous delivery.
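The core idea behind continuous deployment is that automated checks, rather than a manual hand-off to an operations team, decide whether a change reaches production. A minimal sketch of such a gate follows; the stage names and stage functions are hypothetical stand-ins for real build, test and deployment tools.

```python
from typing import Callable, List, Tuple

# Each stage is a (name, action) pair; the action returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[List[str], bool]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that were executed and whether
    every stage, including the final deployment, succeeded.
    """
    executed: List[str] = []
    for name, action in stages:
        executed.append(name)
        if not action():
            return executed, False  # a failing test blocks the deployment
    return executed, True


# Hypothetical stage implementations; real ones would call external tools.
def build() -> bool:
    return True


def unit_tests() -> bool:
    return True


def deploy() -> bool:
    print("deploying new version")
    return True


if __name__ == "__main__":
    print(run_pipeline([("build", build), ("test", unit_tests), ("deploy", deploy)]))
```

Because deployment is just the last stage, a team can ship many times a day as long as the automated tests guarding it are trustworthy.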

Discussing development and agile methodologies is not within the scope of this publication, but this is one thing you have to understand and keep in mind, no matter if you are an IT manager, developer or system administrator.

If you have decided to fully embrace the cloud and you are thinking of adapting your application to take advantage of it, then every single aspect of IT has to be carefully analyzed. Development, whether done internally or outsourced, must be taken into consideration. The way your company is organized also has to change: did I mention that the cloud is a huge shift?

Published: Tuesday, 19 January 2016 23:10

Written by Giuseppe Paternò

So you have read about OpenStack and you are really eager to implement it. But let us step back and understand why you want to embrace the cloud. You might think of several reasons but, judging by my experience, everything comes down to two root causes:

- You are looking to take advantage of the fast provisioning of the infrastructure, either for savings, speed, or both

- Your applications may have varying demand patterns, requiring more computing power during some periods. You may want to take advantage of the scaling capabilities of the Cloud to fire up new instances of key modules at peak periods and shut them down when they are not needed, freeing up infrastructure resources for other tasks and reducing the TCO

Most customers just want a fast provisioning mechanism for their infrastructure. Do not get me wrong, this is perfectly fine and OpenStack gets the job done.

But you will get the full benefit of the cloud only when you have an application that needs resources on demand. Think about a sports news portal during the World Cup, invoicing and billing at the end of the month or a surge in data to be processed from devices.

Wouldn't it be nice if the application detected the increasing load and scaled automatically to cope with the requests? Believe it or not, it is not magic and it is totally feasible. Netflix did it and I can name a lot of other SaaS systems that are doing it. There is only one constraint: you have to be in control of the source code of your applications. If you bought your application “as is”, contact your vendor, but chances are slim that you will be able to follow this pattern.
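The “fire up instances at peak, shut them down afterwards” logic is often implemented as a proportional rule: size the pool so that each instance runs close to a target load, i.e. desired = ceil(current × observed load / target load), clamped between a minimum and a maximum. Kubernetes' Horizontal Pod Autoscaler uses this formula; the function below is a minimal sketch of it with hypothetical parameter names and limits, not the mechanism of any specific OpenStack service.

```python
import math


def desired_instances(current, observed_load, target_load, min_n=1, max_n=20):
    """Proportional scaling rule.

    current        -- number of instances running now
    observed_load  -- average load per instance (e.g. CPU %)
    target_load    -- load per instance we want to run at
    """
    if current == 0:
        return min_n  # nothing running: start from the minimum pool size
    wanted = math.ceil(current * observed_load / target_load)
    # Never scale below min_n or above max_n.
    return max(min_n, min(max_n, wanted))
```

With 4 instances averaging 90% CPU against a 60% target, the rule asks for 6 instances; when the load later drops to 20% across those 6, it scales back to 2. Production autoscalers usually add a tolerance band and cool-down periods so that small fluctuations do not cause constant scaling.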

If you do have the source code, you can adapt your application to take full advantage of your new environment. In this scenario you will have to make deeper changes to your code, since the application itself will need to reconfigure load balancers, allocate resources dynamically and so on. There are some “tricks” an application has to adopt to be “cloudish”, but they are outside the scope of this publication.
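Reconfiguring a load balancer as instances come and go essentially means keeping its list of backends up to date at runtime. Below is a minimal sketch of a round-robin pool that supports this; the class name and the addresses are hypothetical, and real deployments would drive HAProxy, a cloud load-balancing service or similar through its own interface.

```python
from typing import List


class BackendPool:
    """Round-robin pool of backend addresses that can change at runtime."""

    def __init__(self) -> None:
        self._backends: List[str] = []
        self._next = 0  # index of the backend that will serve the next request

    def add(self, addr: str) -> None:
        """Register a new instance, e.g. one just started by the autoscaler."""
        if addr not in self._backends:
            self._backends.append(addr)

    def remove(self, addr: str) -> None:
        """Deregister an instance that is being shut down."""
        if addr in self._backends:
            idx = self._backends.index(addr)
            self._backends.pop(idx)
            if idx < self._next:
                self._next -= 1  # keep the rotation position stable

    def pick(self) -> str:
        """Return the backend that should handle the next request."""
        if not self._backends:
            raise RuntimeError("no backends available")
        self._next %= len(self._backends)
        addr = self._backends[self._next]
        self._next += 1
        return addr
```

An autoscaler would call `add()` after booting an instance and `remove()` before terminating one, so requests are spread only over instances that actually exist.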

It’s quite common for a customer to take a phased approach to the cloud, first taking advantage of fast provisioning and then transforming the application to adapt it to the cloud. The cloud is a long journey and it can be successful: are you ready for it?