When the talk turns to server virtualization, most people immediately think of VMware vSphere and, perhaps, of Microsoft Hyper-V, Citrix XenServer or Red Hat Virtualization (KVM).

Proxmox Virtual Environment (VE) is a lesser-known and less celebrated alternative, but a valid and original one nonetheless.

UPDATE: on 12 December 2015, after our test, version 4.1 was released, based on the latest Debian Jessie with kernel 4.2.6, LXC and QEMU 2.4.1. Several bugs have been fixed, along with issues in the ZFS integration and in several LXC container functions.

Proxmox is an open source project based on KVM and, starting with the new 4.0 version, on LXC (Linux Containers). It’s free, but the company that develops it offers paid commercial support.

It’s a Debian-based hypervisor that uses a modified version of the Red Hat Enterprise Linux (RHEL) kernel, and it’s available as an ISO image for bare-metal installation on a physical host.

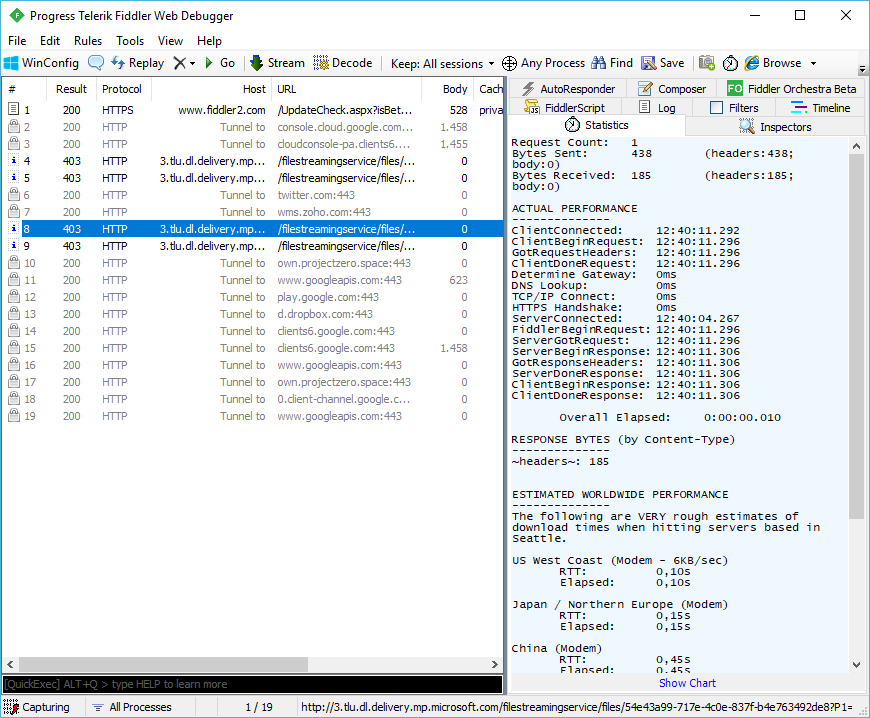

The management interface is Web-based only and doesn’t require a dedicated management server or VM. As with the vast majority of Linux-based products, the command line is sometimes needed for advanced operations. The available documentation is scarce: the dedicated Wiki has some detailed guides covering all the main operations, but the level is quite far from that of projects like vSphere or Hyper-V.

Proxmox offers two types of virtual machines (VMs): full virtualization with KVM (Kernel-based Virtual Machine) and Container-based virtualization.

KVM VMs are the classic autonomous VMs with a dedicated operating system and resources, whereas Containers implement virtualization at the operating system level, offering several isolated Linux systems on a single host.

Starting with release 4.0, Containers are managed using LXC instead of OpenVZ, which has been abandoned.

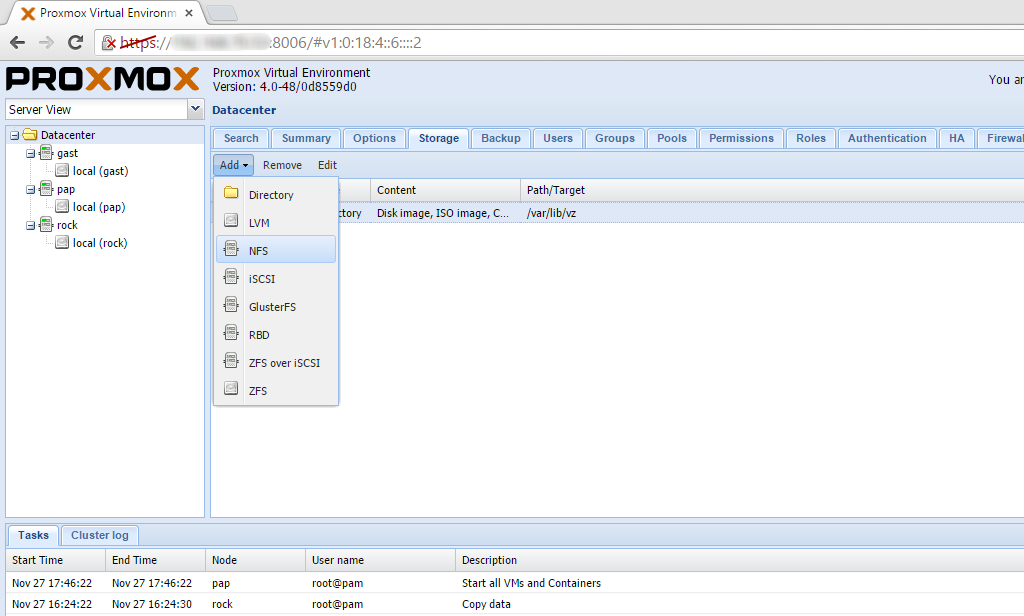

As the best IaaS platforms do, Proxmox allows the creation of multi-node clusters to implement High Availability (HA): this feature is managed through the Web interface, but it has to be set up from the command line. The multi-master architecture means that no node has to be dedicated exclusively to centralized management, which saves resources and avoids a Single Point of Failure (SPOF). Indeed, when accessing any one of the hosts with the Web client, it’s possible to see and manage all the other hosts in the same cluster. With a configuration of this kind, we can also enable HA individually for both VMs and Containers, provided there’s a dedicated shared storage.

Similarly to vSphere, Proxmox supports local disks (with LVM or ZFS), network storage through NFS and iSCSI, and distributed file systems like GlusterFS. When configuring a cluster, it’s worth noting that Ceph can be used for shared storage; Ceph however requires a dedicated management server (although in small environments it can be installed on a node of the cluster).

The platform supports multiple snapshots and includes a native backup function with scheduling for each individual VM. Live migration is available too: you can migrate a VM without downtime, even to different storage (to be clear, the equivalent of vMotion and Storage vMotion in VMware).
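The same operations can also be driven from a node’s shell. The following is a minimal sketch, assuming the standard `qm` and `vzdump` tools on a Proxmox node; the VM ID, snapshot name, node name, disk name and storage names are hypothetical placeholders, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: snapshot, backup and migration of a single VM from the CLI.
# Every value below is a hypothetical placeholder, not taken from the test lab.
VMID="${VMID:-100}"
SNAP_NAME="${SNAP_NAME:-before_upgrade}"
BACKUP_STORAGE="${BACKUP_STORAGE:-backup-nfs}"
TARGET_NODE="${TARGET_NODE:-node2}"
DISK="${DISK:-virtio0}"
TARGET_STORAGE="${TARGET_STORAGE:-shared-nfs}"

# Print the command in dry-run mode (the default), execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Take a named snapshot of the VM.
snapshot_vm() { run qm snapshot "$1" "$2"; }

# Back up the VM to a storage while it keeps running.
backup_vm() { run vzdump "$1" --storage "$2" --mode snapshot; }

# Live-migrate the running VM to another cluster node.
migrate_vm() { run qm migrate "$1" "$2" --online; }

# Move one virtual disk of the VM to a different storage.
move_disk() { run qm move_disk "$1" "$2" "$3"; }

snapshot_vm "$VMID" "$SNAP_NAME"
backup_vm "$VMID" "$BACKUP_STORAGE"
migrate_vm "$VMID" "$TARGET_NODE"
move_disk "$VMID" "$DISK" "$TARGET_STORAGE"
```

Keeping the dry-run default makes it possible to review the exact command lines before anything touches a real VM.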

Networking follows the bridged model, as in vSphere: a bridge is the software equivalent of a physical switch, to which different virtual machines can be connected to share the communication channel. Each host supports up to 4096 bridges, and access to the outside world is provided by associating bridges with physical network ports on the host. Network management is done from the dedicated entry in the menu of the Web interface.
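On a Debian-based host, a bridge of this kind is ultimately described by a stanza in `/etc/network/interfaces`. As an illustration only, the small helper below prints such a stanza for one bridge bound to one physical port; the function name, the interface names and all addresses are hypothetical placeholders, and the output is meant to be reviewed rather than written to the file blindly.

```shell
#!/bin/sh
# Sketch: print an ifupdown stanza for a Linux bridge tied to a physical port.
# bridge_stanza is a hypothetical helper; names and addresses are examples.
bridge_stanza() {
    # $1 = bridge name, $2 = physical port, $3 = address,
    # $4 = netmask,     $5 = gateway
    cat <<EOF
auto $1
iface $1 inet static
    address $3
    netmask $4
    gateway $5
    bridge_ports $2
    bridge_stp off
    bridge_fd 0
EOF
}

# Example: bridge vmbr0 attached to the physical port eth0.
bridge_stanza vmbr0 eth0 192.168.1.10 255.255.255.0 192.168.1.1
```

The `bridge_ports` line is what associates the software bridge with the physical port; VMs and Containers attached to the bridge then reach the external network through it.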

Installation and usage

The installation procedure is quite simple: during the process we can choose between the default settings and custom settings, such as configuring local storage with the ZFS file system (useful when we prefer software-based disk management to hardware RAID on a physical controller). Adding network storage (in our case, NFS) takes only a few steps and makes it visible to a single host or to the whole cluster.

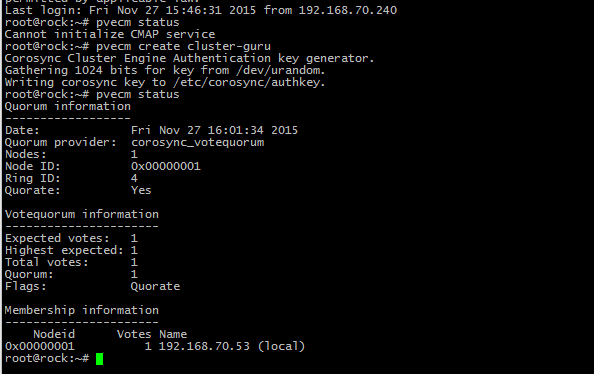

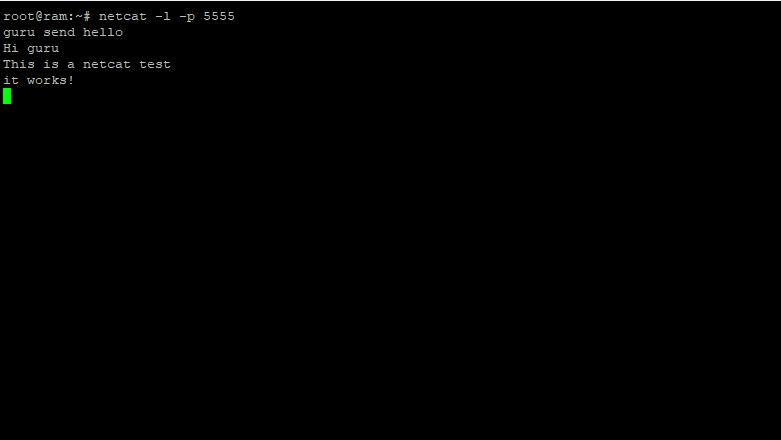

Once the Proxmox setup was done on each node, we created a cluster, a requirement for HA. This is done via the command line on each node: once the cluster is created on the first node, all you need to do is “add” each of the other hosts and watch them appear in the left column of the Web interface. Contrary to what one might expect, just two simple commands are needed for these two operations: pvecm create [cluster-name] (to create the cluster on whichever node) and pvecm add [cluster-IP-address] (run on each node to be added, to join it to the cluster - the following image shows the procedure).

As previously hinted, the multi-master nature of Proxmox allows for completely decentralized management: we can perform actions on the various hosts in the cluster in the same way, regardless of which one we are connected to.

For those who are familiar with other IaaS platforms like vSphere, the creation of virtual machines is structured in a similar way: we can assign resources (disk, RAM, network cards, etc.) and link the VM to an ISO of the operating system to install. Direct connection to the VMs is possible through the included HTML5 console ‘noVNC’ (or SPICE as an alternative), which is integrated in the Web interface and opens in a separate window.
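The two steps can be wrapped in a small script, sketched here under a few assumptions: the cluster name and the IP address of the first node are hypothetical placeholders, the `first`/`join`/`status` sub-commands are our own convention, and nothing is executed unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: two-step cluster setup with pvecm.
# CLUSTER_NAME and FIRST_NODE_IP are hypothetical placeholder values.
CLUSTER_NAME="${CLUSTER_NAME:-lab-cluster}"
FIRST_NODE_IP="${FIRST_NODE_IP:-192.168.1.10}"

# Print the command in dry-run mode (the default), execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Step 1: on the first node only, create the cluster.
create_cluster() { run pvecm create "$1"; }

# Step 2: on every other node, join by pointing at an existing member.
join_cluster() { run pvecm add "$1"; }

# Afterwards, on any node: show cluster state and the member list.
show_cluster() {
    run pvecm status
    run pvecm nodes
}

case "${1:-help}" in
    first)  create_cluster "$CLUSTER_NAME" ;;
    join)   join_cluster "$FIRST_NODE_IP" ;;
    status) show_cluster ;;
    *)      echo "usage: $0 first|join|status" ;;
esac
```

Run with `first` on the node that creates the cluster and with `join` on each of the others; `status` can then be used on any node to confirm that all members are listed.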

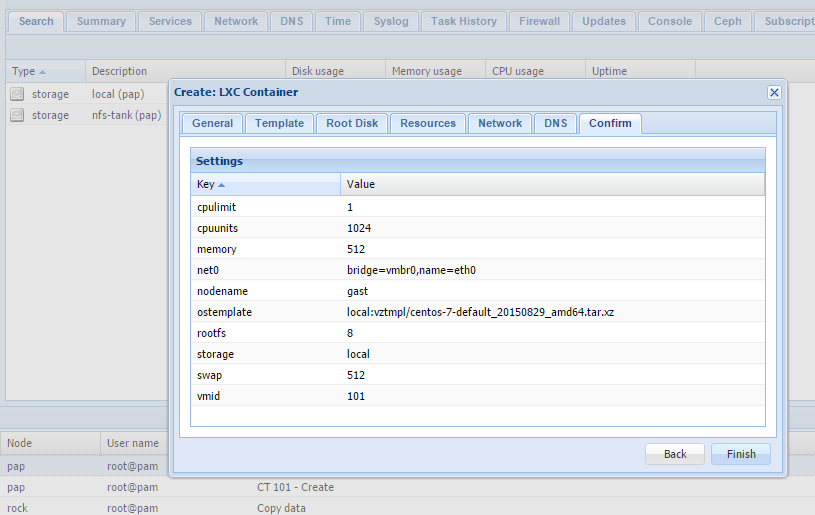

As far as Containers are concerned, creation differs fundamentally in how the operating system is assigned. Because of the nature of Containers, only the network configuration, DNS and hardware resources are set up as in classic VMs; instead of a bootable operating system, we need to provide Proxmox with a dedicated Template. Templates are pre-configured operating system images that can be downloaded straight from the main management interface, ready to be deployed in a Container. Most of the documentation on this topic still refers to OpenVZ templates (for which a dedicated repository was available); with the introduction of LXC, the download is done directly from the host storage management page with the ‘Template’ button, which lists the templates currently available for LXC (Ubuntu 14.04, Arch-base, CentOS 6, Debian 7.0, etc.).
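Once a Template has been downloaded, a Container can also be created from the shell with the `pct` tool. The sketch below is illustrative only: the container ID, the template volume name, the hostname and the bridge are hypothetical placeholders (the actual template file name depends on what was downloaded), and commands are printed rather than executed unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: create and start an LXC Container from a downloaded Template.
# All values are hypothetical placeholders; adjust them to the real storage.
CTID="${CTID:-200}"
TEMPLATE="${TEMPLATE:-local:vztmpl/example-template.tar.gz}"
CT_HOSTNAME="${CT_HOSTNAME:-test-ct}"
BRIDGE="${BRIDGE:-vmbr0}"

# Print the command in dry-run mode (the default), execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

create_container() {
    # $1 = container ID, $2 = template volume, $3 = hostname, $4 = bridge
    run pct create "$1" "$2" --hostname "$3" --memory 512 \
        --net0 "name=eth0,bridge=$4,ip=dhcp"
    run pct start "$1"
}

create_container "$CTID" "$TEMPLATE" "$CT_HOSTNAME" "$BRIDGE"
```

Note that the Template takes the place an ISO image would have for a KVM VM: there is no installer to boot, so the Container is usable as soon as it starts.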

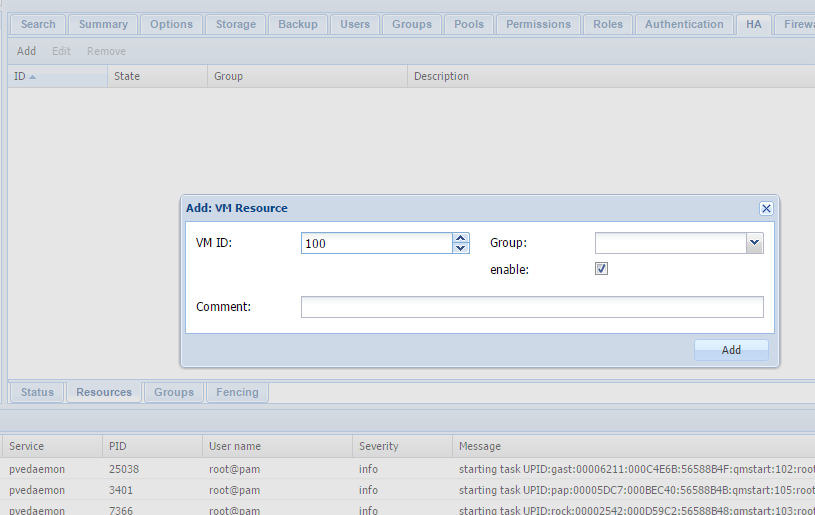

When using HA, it’s necessary to tell Proxmox which virtual machines are included; an entry in the dedicated menu of the Web management console does this and, of course, there’s an equivalent command-line command. Proxmox also offers an HA simulator, a feature that allows testing high availability inside a simulated environment.
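From the shell, this registration can be sketched with the `ha-manager` tool of the 4.x releases, which identifies resources as `vm:<ID>` or `ct:<ID>`. The IDs used below are hypothetical placeholders, and the script prints the commands instead of running them unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: put a VM and a Container under HA management.
# The IDs below are hypothetical placeholders.
VMID="${VMID:-100}"
CTID="${CTID:-200}"

# Print the command in dry-run mode (the default), execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# $1 = resource type (vm or ct), $2 = numeric ID
add_to_ha() { run ha-manager add "$1:$2"; }

# Show which resources are managed and where they currently run.
show_ha() { run ha-manager status; }

add_to_ha vm "$VMID"
add_to_ha ct "$CTID"
show_ha
```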

Our test

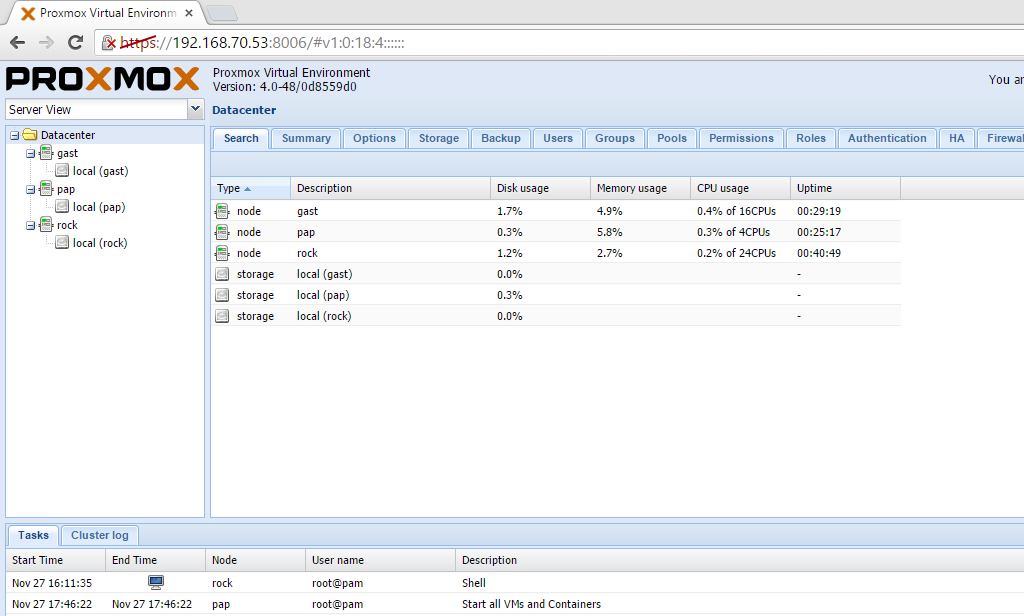

Our testing lab consisted of a cluster of three HP servers (different generations of ProLiant DL360 and DL380), connected to the same subnet and equipped with local storage onto which Proxmox was installed (installation on SD cards or USB flash drives is supported in test labs only, not in production environments).

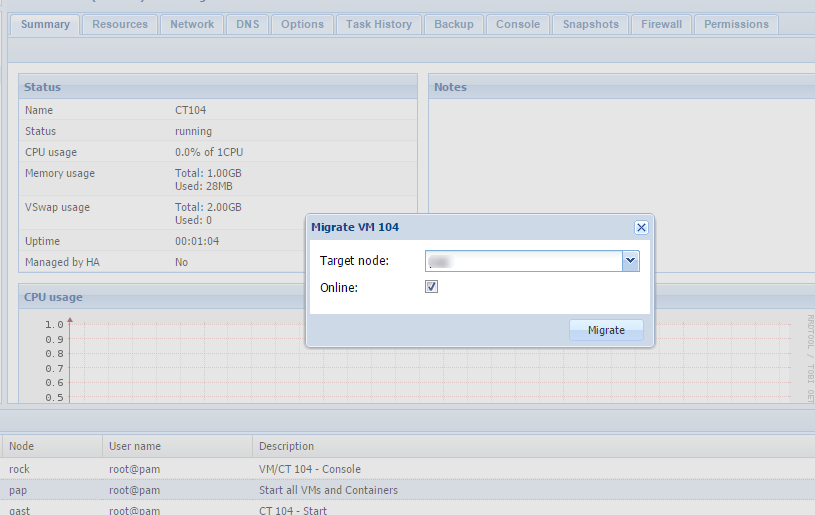

Two of the nodes were configured with the standard procedure, without any advanced configuration; on the third server we used the ZFS file system for local storage, without any installation problem. Apart from the difference in disk management between hardware RAID on the controller and software RAID with ZFS, the use of the storage space available on the node proved completely transparent. Working with virtual machines is comparable to what the better-known solutions offer: the noVNC console allows interacting in a relatively comfortable way even with desktop systems. Access to the VM via SSH or Web interface is completely transparent with respect to the hypervisor. We also tried cloning and template creation without any problem. The subsequent migration of virtual machines and disks from one host to another raised no problems at all, and with the Web interface the procedure is pretty easy, as it just asks for the destination host (in the case of disk migration we can also specify whether to keep or remove the original disk).

Container management is slightly more involved: the shift from OpenVZ to LXC made it necessary to convert previously created Containers (a dedicated guide is available on the Wiki); furthermore, offline migration was introduced only with release 4.0 beta2. Although Proxmox best practices call for a dedicated network port (and related virtual networking) for VM and Container migration, we carried out our tests using a single physical port on the host, onto which the virtual configuration was mapped.

We’d like to report a bug we found when testing HA, again on enterprise-level HP servers (on which we have tried several ESXi versions, up to the latest 6.0, without any compatibility problem).

A Linux bug in the connection between the system and the iLO (the management service of HP servers), which is needed to check the current state of the physical host and identify situations that call for migration, crashes the cluster nodes on which HA is enabled for a VM (specifically, a kernel panic caused by the hpwdt module). Fixing this issue requires disabling the watchdog service.
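The exact workaround is not detailed here. One generic way to keep a kernel module such as hpwdt from loading on a Debian-based system is a blacklist entry under `/etc/modprobe.d`; whether this matches the fix applied in our lab is an assumption. The helper below is a hypothetical sketch that writes such an entry into a directory passed as an argument, and the demonstration at the end uses a temporary directory so that nothing on the system is changed.

```shell
#!/bin/sh
# Sketch: write a modprobe blacklist entry for a kernel module.
# blacklist_module is a hypothetical helper, not a Proxmox command.
blacklist_module() {
    # $1 = module name, $2 = directory holding modprobe configuration
    printf 'blacklist %s\n' "$1" > "$2/blacklist-$1.conf"
}

# Demonstration in a throwaway directory (no root privileges needed).
demo_dir=$(mktemp -d)
blacklist_module hpwdt "$demo_dir"
cat "$demo_dir/blacklist-hpwdt.conf"
rm -r "$demo_dir"

# On a real node (as root, and only after verifying the impact on HA):
#   blacklist_module hpwdt /etc/modprobe.d
# followed by a reboot so that the module is no longer loaded.
```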

Why choose Proxmox

Proxmox is a free and quite complete solution for managing virtual infrastructures in small and medium-sized environments, but before jumping into this project it’s good to weigh certain drawbacks. The main deficiency is the lack of a vast and complete ecosystem of software, services, documentation and support for the whole platform, unlike with Microsoft and VMware, as well as of the high level of reliability required in production environments, particularly large ones.

The bug we found during the configuration of High Availability is an example that made us reflect on the possible consequences of adopting this solution in critical systems.

Considering the recent, and quite pronounced, trend of the IT market towards Containers, Proxmox is a great starting point to get acquainted with the topic. Indeed, starting to work with this method of virtualization is really simple and intuitive, even without an in-depth study of it or a dedicated infrastructure.

Proxmox is certainly a project full of potential and with a wide margin for improvement; however, we’d advise you to carefully consider all the possible implications before adopting it in long-term projects, or in projects that require a greater level of durability and reliability.