Previous article -> An introduction to Docker pt.2

The Docker introduction series continues with a new article dedicated to two fundamental elements of a container ecosystem: volumes and connectivity.

That is, how to let two containers communicate with each other and how to manage data in a folder on the host.

Storage: volumes and bind-mounts

Files created inside a container are stored in the container's writable layer, which has some significant consequences:

- data don't persist once the container is removed.

- it can be hard to get data out of the container if another process needs them.

- the writable layer is tightly coupled to the host where the container runs, so the data can't easily be moved to another host.

- writing to this layer goes through a storage driver, which adds overhead and reduces performance compared with writing directly to the host filesystem.

Docker addresses these problems by allowing containers to perform I/O operations directly on the host with volumes and bind-mounts.

Volumes are managed by Docker itself and don't depend on the directory structure of the host, which makes them easier to back up and migrate; you manage them with the Docker CLI or the Docker API. They can be shared safely among multiple containers, and a new volume can be pre-populated with content by a container. Volume drivers let you store volumes on remote hosts or cloud providers, encrypt their content or add other features.

Another interesting use case of volumes is backup and restore. As seen in the previous article, one of the main characteristics of Docker containers is that they are ephemeral, so they can easily be recreated from their image; however, any data created or modified at runtime is not part of that. Because volumes are managed independently of containers, you can build a backup and restore procedure around them: archive the volume content into a .tar file, then extract the archive into the volume used by the new container.

Volumes are generally stored on the host in /var/lib/docker/volumes (on Linux), and non-Docker processes should not modify them.

docker volume create volume-name

After creating the volume, attach it to a container with the --volume (-v) flag or the --mount flag; Docker recommends the latter syntax.

The commands look like this:

docker run -d --name test-apache --mount source=volume-name,target=/data apache

or

docker run -d --name test-apache --volume volume-name:/data apache

In both cases the volume-name volume is mounted at the /data path inside the container.

docker volume ls lists all volumes. To check what a mounted volume contains, use the exec command (which runs a command inside the container as if you were in a shell there, see the previous article), for instance:

docker container exec container-name ls -halt /data

Bind mounts, unlike volumes, can live anywhere on the host filesystem and can be accessed and modified by any process, including non-Docker ones. They can point to directories or files (by absolute path), including system configuration files, which the container can therefore modify: evaluate this carefully to avoid creating security issues.

To create a bind mount, use the --volume or --mount flag when creating the container. In both cases the host path must be specified:

--volume /var/www/html:/data:ro

or

--mount type=bind,source=/var/www/html,target=/data,readonly

Note that the --volume flag takes up to three colon-separated fields (host path, container path and optional settings such as ro for read-only mode), while the --mount flag uses explicit key=value pairs, which are more verbose but clearer. As with volumes, Docker recommends the --mount flag.

There's another kind of storage: tmpfs mounts. They are useful when you don't want data to persist on the host or inside the container, for security or performance reasons.

Tmpfs mounts are available on Linux only; they live in the host's memory, outside the container's writable layer, and are removed when the container stops, so nothing written there survives.

You create such a mount with the --tmpfs flag (the simpler syntax) or with the --mount flag (which explicitly accepts options for size and permissions):

docker run -d -it --name container-tmp --mount type=tmpfs,tmpfs-size=1G,tmpfs-mode=1777,destination=/data apache

or

docker run -d -it --name container-tmp --tmpfs /data apache

If you decide to store data in the container's writable layer (i.e. if you want to keep data inside the container), that layer is managed by a storage driver sitting on top of the host filesystem; Docker provides several drivers, such as overlay2 (the default on most Linux distributions, typically backed by ext4 or xfs), btrfs and zfs.

Keeping data directly in the container is not optimal, but some specific workloads require it: keep in mind that such data don't survive the removal of the container, and read and write performance is generally poor.

Docker supports block, object and file storage; choose the storage type according to the intended use of the container.

Connectivity

As in any information system, connectivity plays a significant role, especially for a technology like Docker that enables so-called microservices architectures and highly fragmented systems in which every single entity (the container) must be correctly connected.

Docker supports container-to-container and container-to-host networking through dedicated drivers, which provide the bridge, host, overlay, macvlan and none modes; third-party network plugins, such as Weave, are also available.

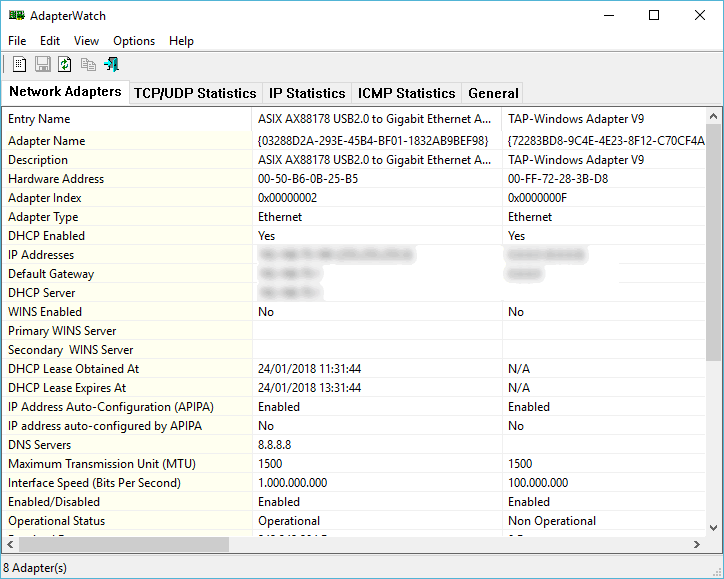

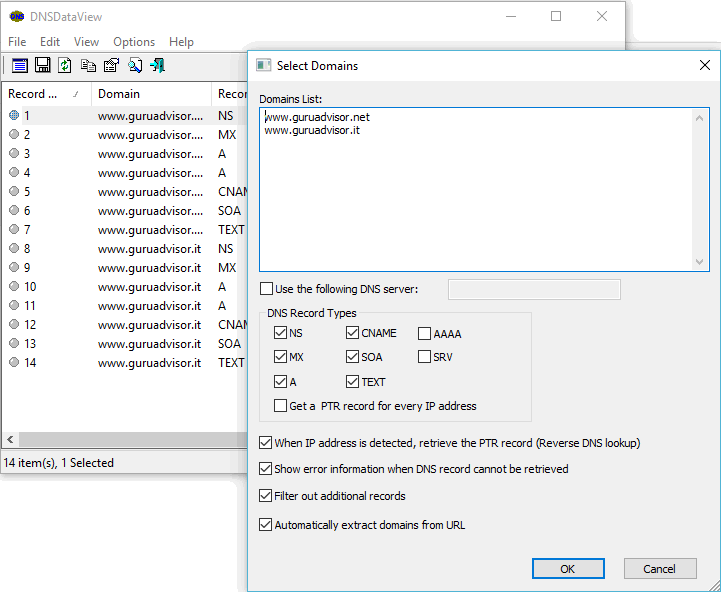

A container doesn't use an emulated network card like a VM; instead, it relies on the physical or virtual interfaces of the host, such as eth0, and the network drivers determine how host and container communicate. From the inside, the container sees a complete network stack: IP address, subnet, gateway, VLAN, routing table, DNS service, etc. The IP address is assigned by the Docker daemon, which picks it from a pool of available addresses and so acts as a DHCP server for its containers. The hostname defaults to the container ID (it can be changed with the --hostname flag), while on user-defined networks other containers can resolve a container by its name. DNS settings are inherited from the host, but they can be customized with flags such as --dns when creating the container.

The host mode is the easiest to configure (no specific configuration is required and no NAT or port mapping is performed) and the most intuitive: there is no network isolation between host and container, as the latter uses the host's physical (or virtual) NIC directly, so it has the same IP address as the host and, most importantly, shares the same port space. In other words, two containers on the same host can't listen on the same TCP port.

A command like the following creates a container with host connectivity:

docker run -d --name apache --network=host apache

The bridge mode solves the port-sharing problem by creating a private network internal to the host, with a separate network namespace for each container (so without the security implications of the host mode): each container gets its own IP address and its whole range of ports. iptables then performs NAT (Network Address Translation) to map host ports to container ports, which costs some raw performance.

This way you can run, for instance, two Apache containers, each listening on port 80 (or 443) on its own IP address, and map them to two different ports on the host. From the outside they are reached at server-ip:port-1 and server-ip:port-2 respectively.

A command like docker run -d --name apache -p 8080:80 apache creates a container with bridge connectivity, with the container's port 80 mapped to port 8080 of the host (port mapping is written as host:container). The --network=bridge parameter doesn't need to be specified: it's the default, and the predefined bridge network is used.

The command docker network create --driver bridge network-name creates a user-defined bridge network, in which containers can reach each other by name thanks to the automatic service discovery provided by Docker's embedded DNS server (the default bridge network doesn't offer this).

Containers on different hosts can't talk to each other directly in host or bridge mode, which only manage containers within a single host (in essence, the Linux kernel does most of the work). For this you need the overlay mode, which spans multiple Docker hosts and is the networking used by Docker Swarm, from a few nodes up to large clusters; Kubernetes relies instead on its own network plugins (CNI), many of which also build overlay networks.

Some configuration is required on each host involved: the ports used for cluster management (TCP 2377), for communication between nodes (TCP and UDP 7946) and for overlay network traffic (UDP 4789) must be open, and the host must join a swarm (we'll cover this topic in an upcoming article: for now, you just need to know that a swarm is Docker's way of managing a cluster of nodes).

This is the network used in clusters, and it is widely used due to the popularity of orchestration solutions. Here too a host:container port mapping can be assigned.

The command docker network create --opt encrypted --driver overlay --attachable network-name creates an overlay network that standalone containers, not only swarm services, can attach to (--attachable), and whose application data traffic is encrypted (--opt encrypted); swarm management traffic is encrypted by default.

The macvlan mode lets containers talk directly to the physical network card by assigning a MAC address to each container's virtual NIC, so that it appears as a physical interface connected to the network: containers get their own IP address on the same subnet as the host and can communicate with external resources without port mapping or NAT. VLANs of the physical NIC can be used as well.

The physical host's network interface must support promiscuous mode (i.e. accept frames addressed to several different MAC addresses), and the subnet and gateway must be specified when creating the network.

This mode is useful for applications that expect to be directly connected to the physical network, such as monitoring tools; the command docker container inspect container-name shows the configuration of the container, and its network section includes the MAC address.

Similarly, this information can be retrieved with commands like docker exec container-name ip addr show or docker exec container-name ip route.

The following command creates a macvlan network on VLAN 90 of the eth0 NIC (with parent=eth0.90, Docker creates the eth0.90 sub-interface if it doesn't exist yet):

docker network create --driver macvlan --subnet=192.168.0.0/24 --gateway=192.168.0.1 -o parent=eth0.90 network-name

Lastly, the none mode: as the name suggests, with this driver the container gets a network stack with only a loopback interface and no external interface.

This mode is useful for testing purposes, for containers that will be connected to a network later and, naturally, for containers that don't need network connectivity at all.
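The isolation of the none mode is easy to check. A minimal sketch, assuming a running Docker daemon and the busybox image: the listing typically contains only lo, and no eth0 interface exists.

```shell
# List the interfaces of a container on the "none" network
docker run --rm --network none busybox ip addr show

# Looking up eth0 fails, since no external interface was created
docker run --rm --network none busybox ip link show eth0 || echo "no eth0 interface"
```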

So far, so good: we should now have a good basic knowledge of Docker containers, which will help us understand how to manage hosts and containers with the proper tools in the upcoming articles.