When purchasing storage devices it is often hard to understand the differences between hard disks and SSDs, between Sata and Sas, and between consumer, Nas and Enterprise tier products: in general, the differences in performance alone do not justify the higher price. Let’s try to understand what changes in terms of reliability and technology.

Just visit the website of any hardware manufacturer and browse the “disks” section to find, even within a single manufacturer, an extremely wide range of devices that goes well beyond the combinations of SSD or traditional disk and consumer or server/Enterprise tier.

With that in mind, the simple distinction between Sata and Sas connections is not enough to explain such a large variety. Models can differ internally, in their connection, and in their intended usage.

Let’s start making a few distinctions by concentrating on traditional 3.5” disks with a rotation speed of 7,200 rpm. We can clearly identify at least 4 types of disk:

- SATA Consumer (for example Seagate Desktop HDD, WD Blue, WD Green): use 8 hours a day, 5 days a week
- SATA NAS (Seagate NAS HDD, WD Red): 24-hour use but with limited data transfer
- SATA business NAS (Seagate Enterprise NAS HDD, WD Red Pro): 24-hour use with intensive data transfer
- SAS Enterprise (WD RE SAS): server use

We’ve already simplified things a little in this list, because several manufacturers have introduced variations and many different models. Moreover, if we take the server world into account too, every vendor (HP, Dell, Lenovo, Fujitsu, etc.) has its own models, perhaps made by the aforementioned brands but modified and fitted with proprietary firmware.

In this article we’d like to focus on data integrity, trying to understand what really changes from one of these disks to another when the main goal is the preservation of data.

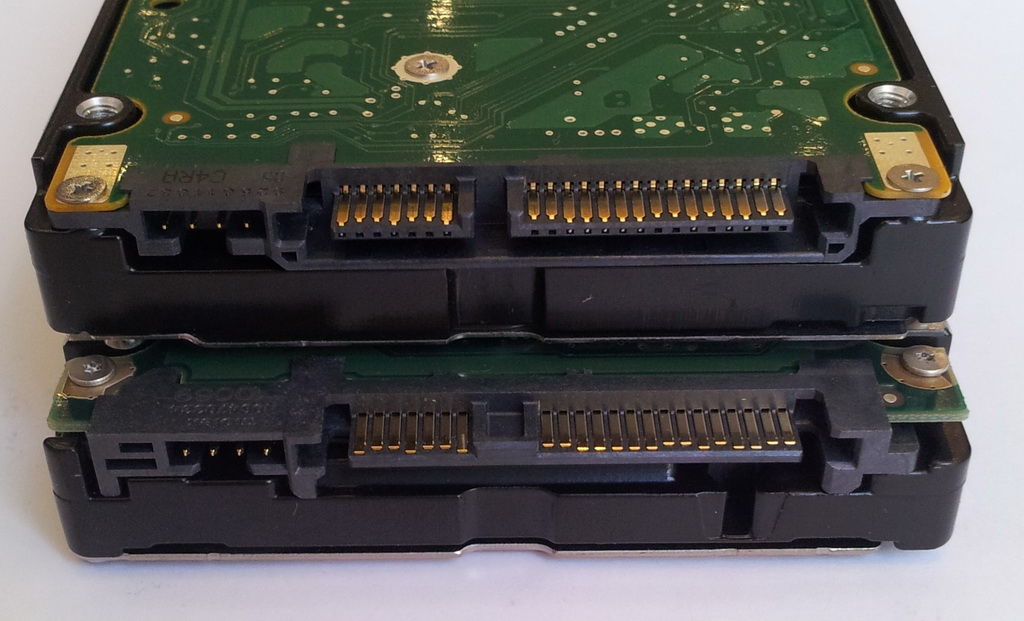

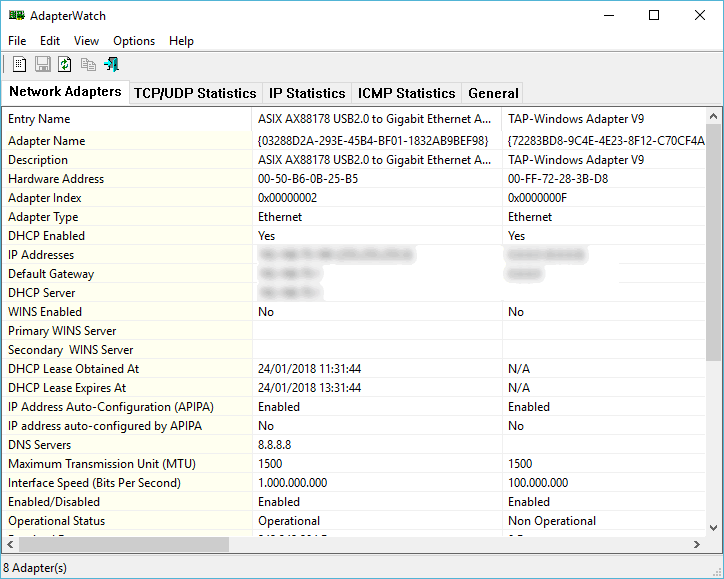

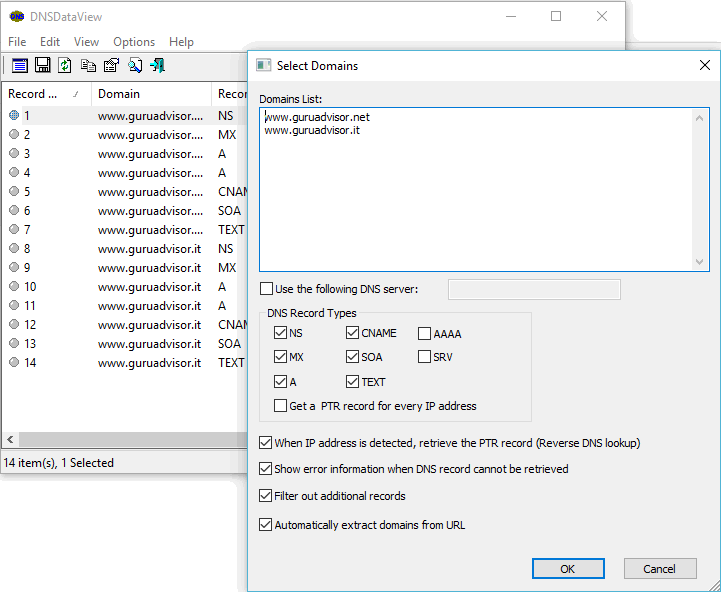

Sas and Sata: disks and connections

A disk with Sata connection, above, and with Sas connection, below. Disks have the same form factor, but different connections.

It’s important to understand that Sas and Sata represent two separate aspects of the same scenario. We often speak of Sas disks or Sata disks as if they were specific disks with well-defined characteristics. This is true only up to a point because the disk itself, in theory, is not very different; what changes is the communication channel, the interface and the commands used.

For instance, Western Digital’s RE line includes both Sata and Sas models, albeit with slightly different specifications.

The most important parameter for evaluating the reliability of a storage system is the Hard Error Rate: a figure provided by the manufacturer that indicates the average number of bits read before a hardware error occurs while reading a sector. Every disk will sooner or later have trouble reading a certain sector because of wear, demagnetization or other causes. More advanced disks are built so that this kind of error is less likely. A hard read error makes it impossible to access all the data stored in that specific sector (typically a 4 Kbyte block).

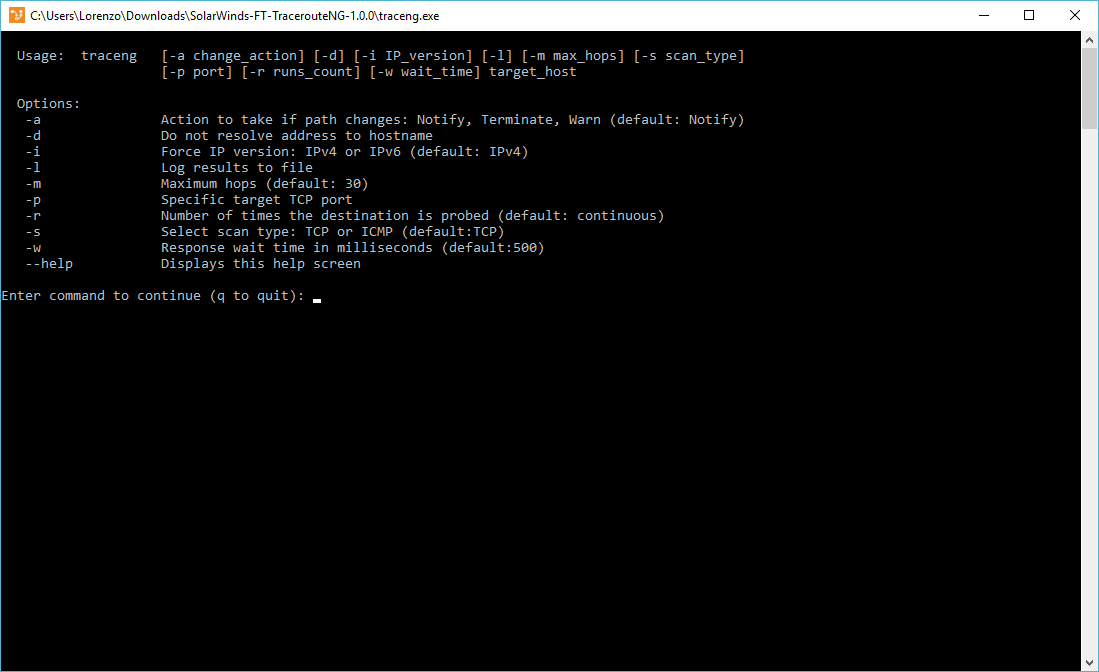

| Type of disk | Hard Error Rate (bit) | Hard Error Rate (Byte) | Hard Error Rate (Tbyte) |
|---|---|---|---|
| Sata Consumer | 1.00E+14 | 1.25E+13 | 11 |
| Sata/Sas Middle Enterprise | 1.00E+15 | 1.25E+14 | 114 |
| Sas/Fc Enterprise | 1.00E+16 | 1.25E+15 | 1,137 |
| Sas Ssd Enterprise | 1.00E+17 | 1.25E+16 | 11,369 |
| Tape | 1.00E+19 | 1.25E+18 | 1,136,868 |

We used the following disks as reference:

- Sata Consumer: Seagate Desktop HDD or Western Digital Blue (1E14)
- Sata/Sas Middle Enterprise: Seagate NAS or Western Digital Red Pro (1E15)
- Sas/Fc Enterprise: Western Digital RE (1E16)
- Sas Ssd Enterprise: Seagate 1200.2 Ssd (1E17)
- Tape: Ibm Tape Storage (1E19)

The table shows that commonly sold Sata disks have a Hard Error Rate (often listed in technical specifications as “Nonrecoverable Read Errors per Bits Read”) that is quite low: 1E14 bits. That means an unrecoverable read error occurs, on average, after approximately 11 Tbyte of data read, leaving the affected sector inaccessible.
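
The conversion from bits to Tbyte used in the table can be checked with a few lines of Python. This is only a minimal sketch: the function name is ours, and it assumes that a Tbyte here means 2^40 bytes, which is the convention that reproduces the published figures.

```python
def bits_to_tbyte(bits_per_error: float) -> float:
    """Convert 'bits read per unrecoverable error' into Tbyte (2**40 bytes)."""
    bytes_per_error = bits_per_error / 8
    return bytes_per_error / 2**40


for label, rate in [
    ("Sata Consumer", 1e14),
    ("Sata/Sas Middle Enterprise", 1e15),
    ("Sas/Fc Enterprise", 1e16),
]:
    print(f"{label}: about {bits_to_tbyte(rate):,.0f} Tbyte per error")
```

Running it gives about 11, 114 and 1,137 Tbyte for the first three rows.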

These are the traditional models found in desktop systems, usually available with Sata connections only and suited to consumer use. They are not suited to workstation or enterprise use, where 11 Tbyte of data read before a hardware error is very little and becomes a source of problems.

Models with a more advanced design and construction have an Error Rate one order of magnitude higher, allowing more than 100 Tbyte of data to be read before an error occurs. These models are available with both Sata and Sas connections, which shows that the communication channel alone is not what makes a disk more or less resilient.

Raid: a resource or a problem?

More advanced models, available only with Sas or Fiber Channel connections, have a Hard Error Rate that is higher still: more than 1,000 Tbyte of data read before a hardware error occurs. The difference in connection matters only in relation to the usage scenario. Nothing prevents the manufacture of Sata disks with these characteristics, but they would be oversized for consumer systems.

Manufacturers prefer to differentiate their models by connection type, offering highly failure-resistant disks for systems that really require them and that are equipped with more advanced, dedicated controllers and management cards.

Beyond these products we enter the realm of Enterprise SSDs, with very high values (more than 10,000 Tbyte), and of tape archiving systems, with values a hundred times higher.

Let’s take RAID as an example. Assume a simple array of four 6 Tbyte Sata disks in RAID 5, a common solution that provides 18 Tbyte of usable space and protection against the failure of one disk. Should one of the disks fail, the system keeps running until the failed disk is replaced with a new one. At that point, rebuilding the array means reading the entire contents of the 3 remaining disks and writing the reconstructed data to the new one. With Consumer-tier disks, if the system is full or nearly full, that means reading up to 6 Tbyte per disk, a value perilously close to the roughly 11 Tbyte that this category of disks supports on average.

Since this operation has to be performed on three different disks, the probability of a hard read error during the RAID rebuild becomes very high. An error can trigger yet another read of the disks, starting a loop that is hard to escape from.
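
To put a rough number on this risk, the sketch below estimates the probability of hitting at least one unrecoverable read error while reading the 18 Tbyte held by the three surviving disks. It is a simplified model, not a manufacturer formula: it assumes that the Hard Error Rate behaves as an independent per-bit error probability and that a Tbyte is 2^40 bytes. The function name is ours.

```python
import math


def rebuild_error_probability(tbyte_to_read: float, bits_per_error: float) -> float:
    """Probability of at least one unrecoverable read error while reading
    `tbyte_to_read` Tbyte, assuming independent errors at 1/bits_per_error per bit."""
    bits_to_read = tbyte_to_read * 2**40 * 8
    # 1 - (1 - p)**n, computed in a numerically stable way for very small p
    return -math.expm1(bits_to_read * math.log1p(-1.0 / bits_per_error))


for label, rate in [
    ("Sata Consumer (1E14)", 1e14),
    ("Sata/Sas Middle Enterprise (1E15)", 1e15),
    ("Sas/Fc Enterprise (1E16)", 1e16),
]:
    print(f"{label}: {rebuild_error_probability(18, rate):.1%}")
```

Under these assumptions a full rebuild on consumer disks runs into an error roughly four times out of five, while the figure drops to about 15% for the middle tier and below 2% for enterprise disks. Real drives may behave better or worse than their datasheet value, so the numbers are indicative only.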

Until a few years ago, when maximum disk capacity was measured in Gbyte, the problem was not significant. Today, with such large models, it is particularly relevant and significantly reduces the effectiveness of a system as widespread as RAID.

8 Tbyte disks exist, and with them the statistically significant risk that a disk can be read in full just once before running into a hardware error. For this reason, storage for professionals and SMBs should be built from disks with superior resilience, able to withstand values tens or hundreds of times higher, which offers partial shelter from these unfortunate scenarios.

Enterprise disks have a far lower error rate than consumer models (we have deliberately avoided a rough distinction between Sata and Sas, as the connection is a secondary aspect up to this point), and they should be preferred when data integrity is an absolute priority, whether personal or business.

Moreover, when working with high-capacity disks (say 4, 6 or 8 Tbyte) it is worth asking what level of protection technologies like RAID 5 can really offer, considering not only the risk of data loss but also the time needed to rebuild a disk after a failure. During that time performance decreases and there is no protection at all against a second failure. It’s better to consider more reliable solutions like RAID 10 or RAID 6, which can withstand the failure of more than one disk, or a different file system (ZFS) or alternative architectures (erasure coding).

Data integrity and silent corruption

The importance of data integrity is proportional to the value of the data. Be it photos of your children (where value is measured in sentimental terms) or key projects of your company (where value is measured in more tangible terms), data is often very valuable. Losing stored data because of a hardware error or corruption involves high costs in terms of recovery and time (when recovery is possible at all). It’s therefore important to adopt every available strategy to prevent this situation, committing resources in proportion to the value we place on that data.

In addition to the hardware errors discussed so far, there are other kinds of error that are subtler and harder to handle. The first is called Silent Data Corruption.

Unlike hardware errors, which are immediately evident because the system cannot physically access the device, some errors show up only later, when you try to access the faulty file. Let’s say we want to write the word “file” and, while the data is being sent to the disk, the word “fiel” is written instead. It’s a communication error: a series of bits that, for some reason, changed order and so altered the meaning of the whole word. Only when the data is read do we realize that it is corrupted, which makes it very hard to restore what we originally created.

How can such situations be prevented? Fundamentally there are two ways: one is based on communication protocols and the other on the disk file system.

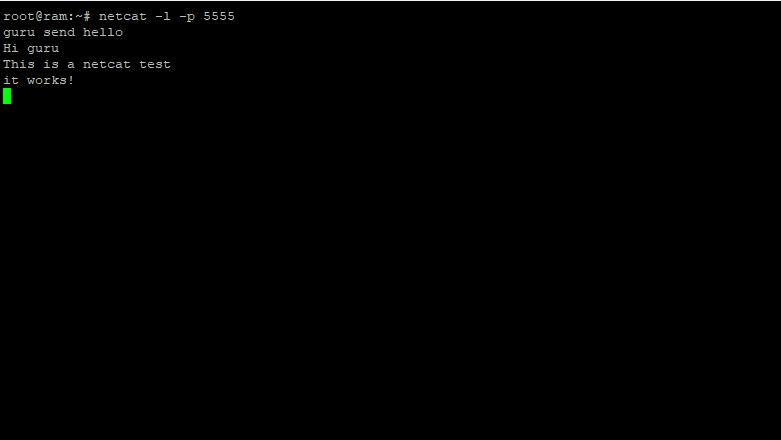

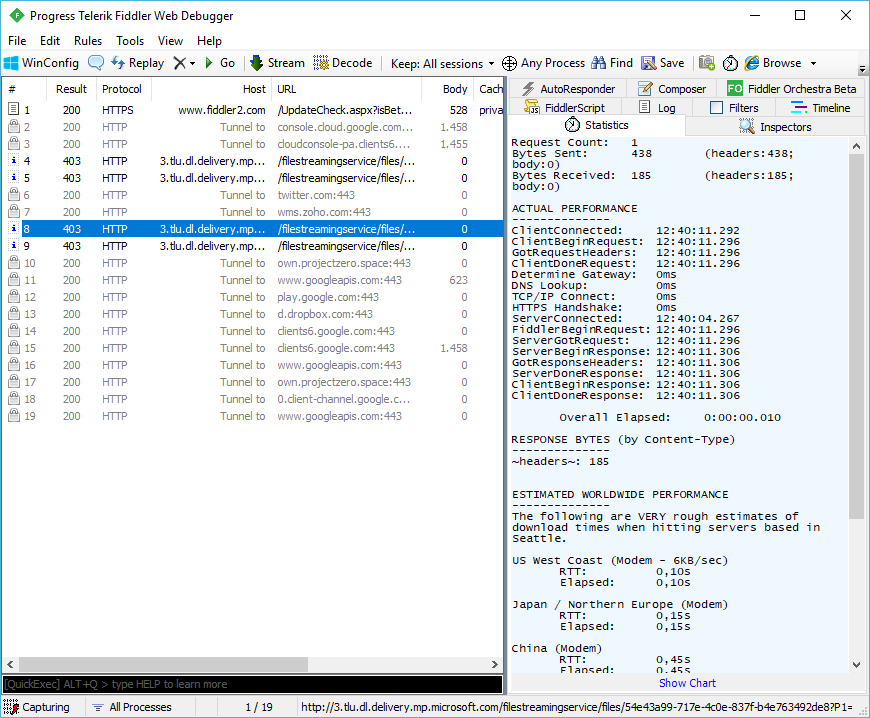

Prevention: communication protocols

The disk is not the only delicate point in data integrity. Data must be sent from the system controller to the disk’s controller over a communication channel that, because of its electronic nature, is subject to errors. If the system sends a certain string of bits and the disk controller receives a different string, the problem lies in the channel, and the disk will return corrupted data through no fault of its own.

The Sata connection, designed to favor simplicity of connection and management, implements only a handful of measures against these problems, whereas an Enterprise-level solution implements several. Communication channels have an indicator similar to the previous one, called SDC (Silent Data Corruption), that gives a first estimate of the reliability of a connection. The common Sata standard has an SDC rate ranging from 1E15 to 1E17 bits, while the more advanced Sas or Fiber Channel reach 1E19 or 1E21.

Number of errors per year at a given average transfer speed:

| Interface | Silent Data Corruption Rate (bit) | 10 MB/s | 100 MB/s | 500 MB/s | 1 GB/s |
|---|---|---|---|---|---|
| Sata Standard | 1.0E+15 | 2.6 | 26.5 | 132.3 | 264.5 |
| Sata 3 | 1.0E+17 | 0 | 0.3 | 1.3 | 2.6 |
| Sas | 1.0E+19 | 0 | 0 | 0 | 0 |
| FC | 1.0E+21 | 0 | 0 | 0 | 0 |

These values make it clear that using Sata connections in an Enterprise-level environment will almost certainly lead to a series of silent errors that corrupt data even before it is written to disk.
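
The yearly error counts can be reproduced with a simple calculation: bits transferred in a year divided by the Silent Data Corruption rate. The sketch below is our own reconstruction, not the authors' spreadsheet. It assumes a continuous transfer all year long, binary megabytes (2^20 bytes) and 1 GB/s counted as 1,000 MB/s, because those assumptions match the published values.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600
MBYTE = 2**20  # assumption: binary megabytes reproduce the table values


def errors_per_year(mbyte_per_second: float, bits_per_error: float) -> float:
    """Expected silent errors per year at a constant average transfer speed."""
    bits_per_year = mbyte_per_second * MBYTE * 8 * SECONDS_PER_YEAR
    return bits_per_year / bits_per_error


for label, rate in [("Sata Standard", 1e15), ("Sata 3", 1e17)]:
    row = [errors_per_year(speed, rate) for speed in (10, 100, 500, 1000)]
    print(label, [round(value, 1) for value in row])
```

For the 1E15 rate this yields about 2.6 errors per year at 10 MB/s and about 264.5 at 1 GB/s.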

Sas, on the other hand, uses a specific protocol that exchanges and records parity information about the data, which greatly limits this kind of error.

In practice, each data exchange is accompanied by a final checksum that verifies that the received packet is the same as the one sent. Even so, if multiple errors occur there is still some probability of an undetected error, although far lower than with Sata. The optical fiber connection, FC (Fiber Channel), is even better: it uses light instead of simple electrical pulses, which increases resistance to interference and, as a consequence, the robustness of the connection.
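
The idea of a checksum travelling with the data can be illustrated in a few lines. This is a toy example with a hypothetical frame layout (payload followed by a 4-byte CRC32 from Python's `zlib` module); it is not the actual framing or checksum algorithm of Sas or Fiber Channel.

```python
import zlib


def make_frame(payload: bytes) -> bytes:
    """Append a 4-byte CRC32 of the payload (hypothetical frame layout)."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def check_frame(frame: bytes) -> bool:
    """Recompute the CRC32 on the receiving side and compare it with the one sent."""
    payload, received_crc = frame[:-4], int.from_bytes(frame[-4:], "big")
    return zlib.crc32(payload) == received_crc


frame = make_frame(b"file")
corrupted = b"fiel" + frame[4:]  # payload altered in transit, checksum unchanged

print(check_frame(frame))      # True
print(check_frame(corrupted))  # False
```

The receiver rejects the altered frame and can ask for it to be sent again, instead of silently storing “fiel”.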

Obviously, if a storage system contains several disks, their traffic must be added up, and you quickly find yourself almost certain that some silent data corruption error will happen before long.

File system as a cure

Even in the most advanced systems, using Enterprise disks with SAS or FC connections, unrecoverable read errors can happen.

The values stated previously are averages and indicate the overall resilience of products and communication channels. Unfortunately we can stumble upon a problem after just a few Tbyte read, even if this is statistically far less probable on higher-tier disks than on common Sata models, and on fiber connections than on Sata. Those who want stronger guarantees of reliability can work on another aspect: the file system used on the disks.

Let’s start with a clarification: working on the file system means adopting a technique that makes it possible to detect errors when reading or writing a piece of data and to try to fix them.

Identifying an error, especially a silent one, is usually quite difficult. We need a file system that counts the detection of silent errors among its features.

ZFS, originally developed by Sun, is one of the most appreciated file systems in the enterprise world thanks to its resilience to errors. Named for its enormous 128-bit addressing space (ZFS stands for Zettabyte File System), it has some very interesting data security features.

This file system calculates a 256-bit checksum of each block written to disk and stores it inside the pointer to that block. The pointer itself is in turn protected by a checksum. When a block is read, its checksum is compared with the one previously stored. If they match, the data is considered valid and handled normally; otherwise the whole block is considered faulty and is retrieved again through the redundancy mode in use (Mirroring or a higher Raid level). Thanks to this, ZFS can detect a good share of the data corrupted by the transmission channel or by a faulty write. The probability that the same piece of data also arrived corrupted on the second disk, or on the other disks in the array, because of the channel is indeed quite low.
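
The verify-on-read mechanism can be sketched with a toy two-disk mirror. This is only an illustration of the principle described above, not ZFS code: the class, its methods and the use of SHA-256 as the 256-bit checksum are our own assumptions.

```python
import hashlib


class MirroredStore:
    """Toy two-way mirror that keeps a checksum separately from the data."""

    def __init__(self) -> None:
        self.copies = [{}, {}]  # two "disks": block id -> stored bytes
        self.checksums = {}     # block id -> expected digest (the "pointer")

    def write(self, block_id: int, data: bytes) -> None:
        self.checksums[block_id] = hashlib.sha256(data).digest()
        for disk in self.copies:
            disk[block_id] = data

    def read(self, block_id: int) -> bytes:
        expected = self.checksums[block_id]
        for disk in self.copies:
            data = disk[block_id]
            if hashlib.sha256(data).digest() == expected:
                # Found a valid copy: overwrite any copy that differs from it
                for other in self.copies:
                    if other[block_id] != data:
                        other[block_id] = data
                return data
        raise IOError(f"block {block_id}: every copy fails its checksum")


store = MirroredStore()
store.write(0, b"file")
store.copies[0][0] = b"fiel"  # simulate silent corruption on the first disk
print(store.read(0))          # b'file'
print(store.copies[0][0])     # b'file' (repaired from the healthy copy)
```

The corrupted copy is detected because it no longer matches the stored checksum, the good copy on the second disk is returned, and the bad one is repaired in passing.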

In this case Raid and file system work together to partially solve the problems of the Sata connection, while bringing the same improvements to Sas and Fiber Channel connections and making these interfaces even safer. ZFS doesn’t require a disk controller with Raid capabilities; instead it implements Raid in software, with a system that is even more robust in certain respects. ZFS still offers modes that correspond to those of a Raid system (for instance, Mirror is equivalent to Raid 1, Raid-Z to Raid 5): we’ll discuss them in detail in an article in one of the upcoming issues.

In conclusion, the situation is quite clear: guaranteeing data integrity requires measures on several fronts. Enterprise-level disks safeguard against hardware errors, thanks to a Hard Error Rate far higher than that of common consumer disks. The type of connection plays an important role, as Sata is less resilient than Sas or Fiber Channel. These two measures alone provide a good dose of protection, while the adoption of an advanced file system adds protection from problems like silent data corruption.