Everybody is talking about Hyperconvergence and the Software-Defined Datacenter (SDDC), but what are the pros and cons of these architectures? We have tested the solution VMware proposes for storage hyperconvergence, Virtual SAN 6.1, announced at the recent VMworld. Let’s take a look at its peaks and troughs.

The basic concept is quite simple: after spending so much money to make your infrastructure reliable, perhaps by purchasing two or more high-end hosts, why not leverage them directly to manage storage redundantly as well, without spending several thousand euros on additional dedicated storage? This simple idea is the core of the advantages hyperconvergence brings to storage. Many solutions are available on the market, but VMware’s has a hidden strength: it was designed and developed by the very same people who designed the hypervisor.

Virtual SAN was introduced with vSphere 5.5 and enhanced with vSphere 6.0. The recent 6.1 release, reviewed here, has been profoundly redesigned to improve performance and meet the needs expressed by users of the previous releases.

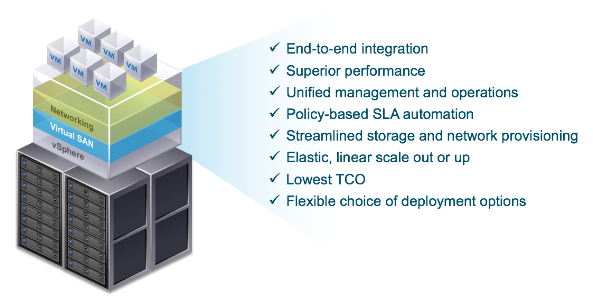

All the compute, storage and networking resources used by VSAN are provided by the individual nodes that make up the architecture. Management is unified and scaling is particularly elastic: adding new nodes makes their resources available dynamically and automatically.

VMware hyperconverged infrastructure

Hyperconverged storage solutions available on the market include:

- Nutanix Acropolis

- SimpliVity OmniCube

- Maxta Storage Platform

- Scale HC3

- Nimboxx VDI Appliance

- Pivot3 vSTAC

- HP Converged System

As the list above shows, VMware Virtual SAN is not the only hyperconverged storage solution on the market. The available solutions fall into two categories: preconfigured hardware appliances, which bundle servers, storage and networking, and software appliances installed on virtualized hosts, on top of which a distributed storage layer is created.

However, a like-for-like comparison between Virtual SAN and competing solutions is not possible, because VMware’s product has the great advantage of being fully integrated with vSphere, something third-party storage cannot achieve. That doesn’t mean VSAN should be preferred a priori to other solutions; in a VMware virtual infrastructure, though, the advantages are self-evident, since VSAN is included in the kernel of the ESXi hosts and is simply a feature to activate on a cluster.

How it works

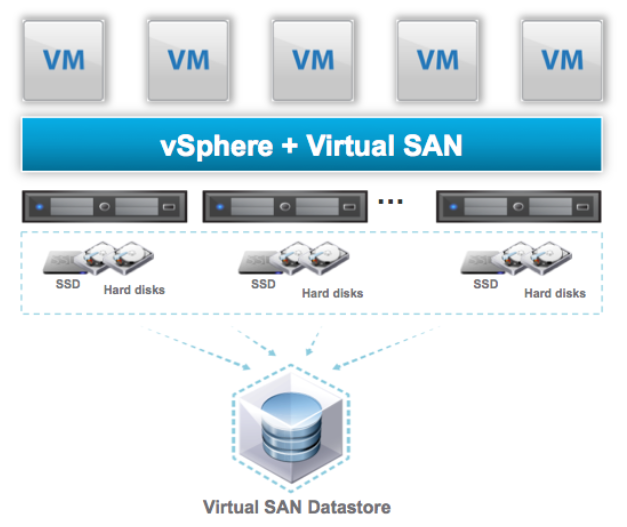

Virtual SAN lets you create a shared datastore from the local storage devices (hard disks, SSDs, flash) installed in the hosts. A Virtual SAN datastore can be used by other VMware services that require shared storage to operate properly, such as vSphere HA, vMotion and DRS.

VSAN datastore distributed across all the hosts of the cluster

With VSAN, resilience (that is, resistance to failures) is managed in software: the hosts need no hardware RAID configuration, so there is no complex creation and management of RAID arrays. VSAN applies a network RAID concept to protect data against disk and host failures. Because protection is implemented in software, VSAN monitors the whole infrastructure so that, at any moment, at least two copies of each object exist on at least two different hosts.
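To make the replica rule concrete, here is a minimal Python sketch of the arithmetic behind VSAN's mirroring-based protection. It assumes the relationship VMware documents for the "Number of failures to tolerate" rule: tolerating n failures needs n + 1 full copies and at least 2n + 1 hosts, the extra hosts holding witness components (in the simplest case, one witness per tolerated failure). The function names and the sequential placement are hypothetical simplifications, not VSAN's actual placement algorithm.

```python
def replicas_needed(failures_to_tolerate: int) -> int:
    """Full data copies required to survive n failures with mirroring."""
    if failures_to_tolerate < 0:
        raise ValueError("failures_to_tolerate must be >= 0")
    return failures_to_tolerate + 1


def min_hosts(failures_to_tolerate: int) -> int:
    """Minimum hosts: n + 1 replicas plus n witnesses, each on a distinct host."""
    return 2 * failures_to_tolerate + 1


def place_components(hosts: list[str], failures_to_tolerate: int) -> dict[str, str]:
    """Hypothetical placement: every replica and witness lands on a different host."""
    needed = min_hosts(failures_to_tolerate)
    if len(hosts) < needed:
        raise ValueError(f"need at least {needed} hosts, got {len(hosts)}")
    n_replicas = replicas_needed(failures_to_tolerate)
    placement = {}
    for i, host in enumerate(hosts[:needed]):
        if i < n_replicas:
            placement[f"replica-{i + 1}"] = host
        else:
            placement[f"witness-{i - n_replicas + 1}"] = host
    return placement


print(place_components(["esx01", "esx02", "esx03"], failures_to_tolerate=1))
# {'replica-1': 'esx01', 'replica-2': 'esx02', 'witness-1': 'esx03'}
```

With one failure to tolerate, the sketch yields two copies on two distinct hosts plus a witness, which is also why a standard VSAN cluster needs at least three hosts.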

Data protection in a VSAN datastore is defined through policies assigned to virtual machines: they are configured in the vSphere Web Client and determine attributes such as disk capacity, performance and availability. The objects that make up a VM (in particular its .vmdk virtual disks) are then distributed automatically across the datastore to deliver the performance and availability specified in the assigned policy.
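As an illustration of how policy rules translate into resource usage, the sketch below models a VM storage policy as a plain Python dataclass. The field names paraphrase rules shown in the Web Client (Number of failures to tolerate, Number of disk stripes per object, Object space reservation); the class and function are hypothetical and do not correspond to any VMware API. The defaults mirror VSAN's default policy (one failure to tolerate, one stripe, thin provisioning). Under mirroring, each tolerated failure adds a full copy, so a disk can consume up to its size times (failures + 1) in raw space, while only the reserved percentage is set aside up front. The component count ignores witnesses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StoragePolicy:
    """Hypothetical model of a few VSAN VM storage policy rules (not a VMware API)."""
    failures_to_tolerate: int = 1      # "Number of failures to tolerate"
    stripes_per_object: int = 1        # "Number of disk stripes per object"
    object_space_reservation: int = 0  # percent reserved up front; 0 = thin provisioning

    def __post_init__(self) -> None:
        if self.failures_to_tolerate < 0 or self.stripes_per_object < 1:
            raise ValueError("invalid failures_to_tolerate or stripes_per_object")
        if not 0 <= self.object_space_reservation <= 100:
            raise ValueError("object_space_reservation must be between 0 and 100")

    @property
    def data_components(self) -> int:
        """Each replica is split into stripes: components = copies x stripes."""
        return (self.failures_to_tolerate + 1) * self.stripes_per_object


def raw_footprint_gb(vmdk_gb: float, policy: StoragePolicy) -> tuple[float, float]:
    """Return (maximum raw GB the disk can consume, raw GB reserved up front)."""
    max_raw = vmdk_gb * (policy.failures_to_tolerate + 1)  # one full copy per replica
    reserved = max_raw * policy.object_space_reservation / 100
    return max_raw, reserved


default = StoragePolicy()
print(default.data_components, raw_footprint_gb(100.0, default))  # 2 (200.0, 0.0)
```

Raising the failures to tolerate to 2 on the same 100 GB disk would push the maximum raw footprint to 300 GB, which is the main capacity trade-off to weigh when designing policies.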

Virtual SAN shares several traits with traditional storage arrays, but behaves differently, in particular:

- No network storage is needed to store VMs, so standard storage protocols such as iSCSI and FCP are not used.

- No traditional storage volumes based on LUNs or NFS shares are needed.

- A dedicated storage administrator is not required, as vSphere admins can manage a VSAN datastore with full autonomy.

The requirements

A vSphere infrastructure based on Virtual SAN can be built either with preconfigured solutions from VMware’s partners, called Virtual SAN Ready Nodes, or by assembling a VSAN cluster yourself after checking the hardware against VMware's Virtual SAN Compatibility Guide (VCG).

Virtual SAN 6.1 requires VMware vCenter Server 6.0 and at least three ESXi 6.0 hosts. Both the Windows vCenter Server and the vCenter Server Appliance (vCSA) are supported. Management is done exclusively through the vSphere Web Client. Each host must have at least one 1Gb Ethernet interface dedicated to the VSAN network, although VMware recommends 10GbE.

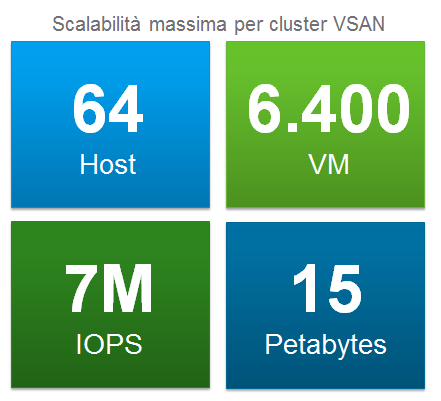

Since the hosts do not require a hardware RAID configuration, their disk controller can be a SAS or SATA HBA (Host Bus Adapter). If you use a RAID controller, configure it in passthrough mode or as RAID 0. A VSAN instance supports a single cluster, but that cluster can contain up to 64 hosts.
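The requirements above can be summarized as a simple pre-flight check. The sketch below is hypothetical: the `Host` type and its fields are invented for illustration, it does not query real hosts or vCenter, and version strings are compared naively. It encodes the rules listed in this section plus one assumption worth verifying against VMware's documentation: that All-Flash configurations need 10GbE rather than 1GbE.

```python
from dataclasses import dataclass

MIN_HOSTS, MAX_HOSTS = 3, 64
SUPPORTED_CONTROLLER_MODES = {"hba", "passthrough", "raid0"}


@dataclass
class Host:
    """Hypothetical summary of one ESXi host's VSAN-relevant settings."""
    name: str
    esxi_version: str      # e.g. "6.0" or "6.0 U1"
    vsan_nic_gbps: int     # speed of the NIC dedicated to VSAN traffic
    controller_mode: str   # "hba", "passthrough" or "raid0"


def check_cluster(hosts: list[Host], vcenter_version: str,
                  all_flash: bool = False) -> tuple[list[str], list[str]]:
    """Return (errors, warnings) for the requirements listed in this section."""
    errors, warnings = [], []
    if not vcenter_version.startswith("6.0"):
        errors.append(f"vCenter {vcenter_version}: vCenter Server 6.0 is required")
    if not MIN_HOSTS <= len(hosts) <= MAX_HOSTS:
        errors.append(f"{len(hosts)} hosts: a cluster needs {MIN_HOSTS} to {MAX_HOSTS}")
    min_gbps = 10 if all_flash else 1  # assumption: All-Flash requires 10GbE
    for h in hosts:
        if not h.esxi_version.startswith("6.0"):
            errors.append(f"{h.name}: ESXi {h.esxi_version}, 6.0 is required")
        if h.controller_mode not in SUPPORTED_CONTROLLER_MODES:
            errors.append(f"{h.name}: use an HBA, or a RAID controller in passthrough/RAID 0")
        if h.vsan_nic_gbps < min_gbps:
            errors.append(f"{h.name}: {h.vsan_nic_gbps}GbE VSAN link, {min_gbps}GbE needed")
        elif h.vsan_nic_gbps < 10:
            warnings.append(f"{h.name}: 10GbE is recommended for the VSAN network")
    return errors, warnings


hosts = [Host(f"esx0{i}", "6.0", 10, "passthrough") for i in range(1, 4)]
print(check_cluster(hosts, "6.0"))  # ([], [])
```

Keeping warnings separate from errors reflects the distinction the requirements make between what is mandatory (1GbE) and what VMware merely recommends (10GbE).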

The setup

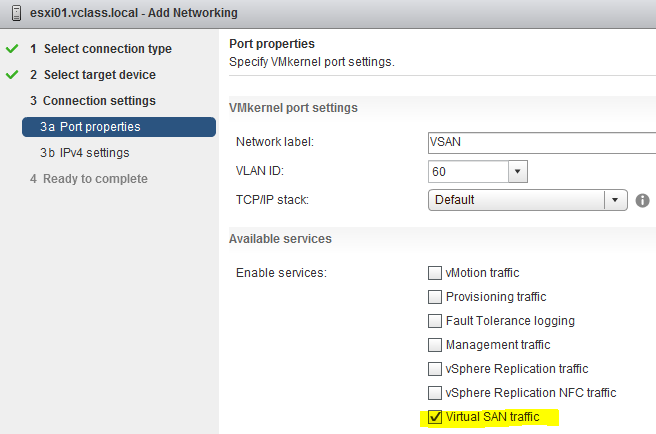

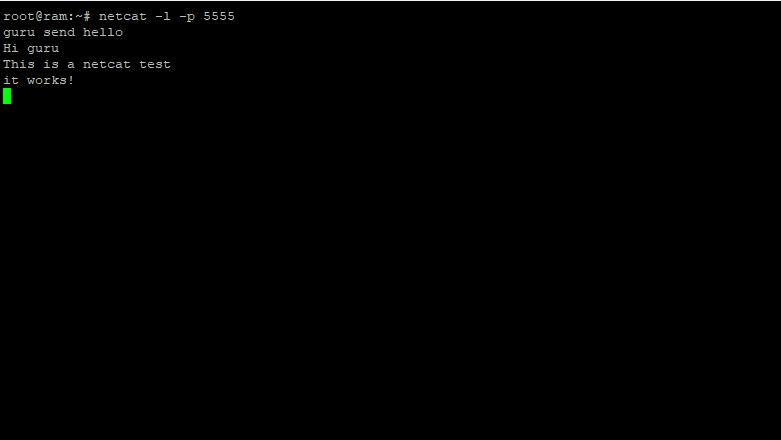

VSAN is a feature already included in the vSphere kernel that simply needs to be activated on a cluster; the first step is to create, on each host of the cluster, a VMkernel network interface (NIC) with VSAN traffic enabled.

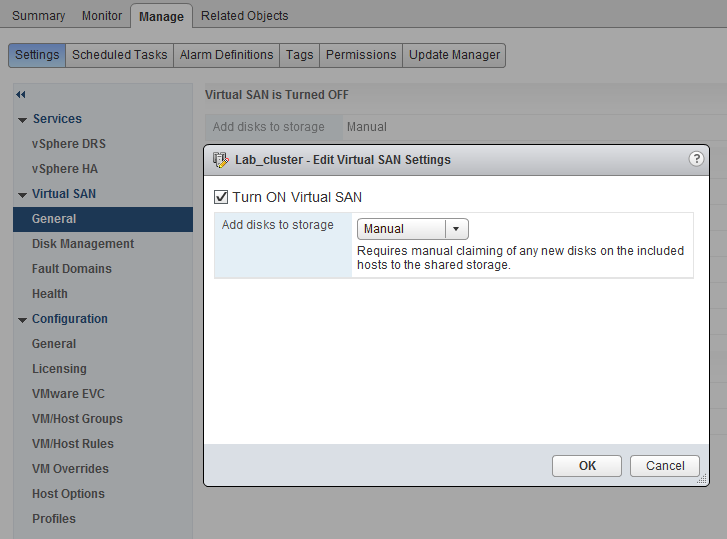

Once this prerequisite is satisfied, enable the Virtual SAN service on the host cluster through the Manage > Settings > Virtual SAN tab.

The initial configuration asks whether disks should be added automatically or manually. Automatic mode claims all the empty devices on the hosts of the cluster; manual mode lets the administrator select the devices step by step.

Storage devices are organized into disk groups: each host can have up to 5 disk groups, and each disk group contains one flash device for caching (cache tier) and one to seven devices for data storage (capacity tier), for a total of up to 35 capacity disks per host. In automatic mode, VSAN organizes the devices into disk groups by itself.
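To make the disk group limits concrete, the sketch below arranges a host's devices within them: one flash cache device per group, up to seven capacity devices per group and up to five groups per host (the 35-disk total counts capacity devices only). It is a hypothetical simplification, not VSAN's actual claiming logic; in particular, spreading capacity devices round-robin across groups is an assumption, and the device names are invented.

```python
MAX_GROUPS_PER_HOST = 5
MAX_CAPACITY_PER_GROUP = 7


def build_disk_groups(cache_devices: list[str], capacity_devices: list[str]) -> list[dict]:
    """Hypothetical grouping: one flash cache device per group, capacity spread round-robin."""
    # Flash devices beyond the fifth are simply left unclaimed in this sketch.
    n_groups = min(len(cache_devices), MAX_GROUPS_PER_HOST)
    if n_groups == 0:
        raise ValueError("each disk group needs one flash cache device")
    if len(capacity_devices) < n_groups:
        raise ValueError("each disk group needs at least one capacity device")
    groups = [{"cache": cache_devices[i], "capacity": []} for i in range(n_groups)]
    for i, device in enumerate(capacity_devices):
        group = groups[i % n_groups]
        if len(group["capacity"]) >= MAX_CAPACITY_PER_GROUP:
            limit = n_groups * MAX_CAPACITY_PER_GROUP
            raise ValueError(f"{len(capacity_devices)} capacity devices exceed the limit of {limit}")
        group["capacity"].append(device)
    return groups


for g in build_disk_groups(["ssd0", "ssd1"], ["hdd0", "hdd1", "hdd2", "hdd3", "hdd4"]):
    print(g["cache"], g["capacity"])
# ssd0 ['hdd0', 'hdd2', 'hdd4']
# ssd1 ['hdd1', 'hdd3']
```

A host with five flash devices and 35 capacity disks fills every group to its limit; a 36th capacity disk cannot be placed.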

Enabling the VSAN service on a cluster creates a datastore called vsanDatastore, shared among the hosts and therefore usable by advanced services such as vSphere High Availability, vSphere Distributed Resource Scheduler and vSphere vMotion.

Data protection

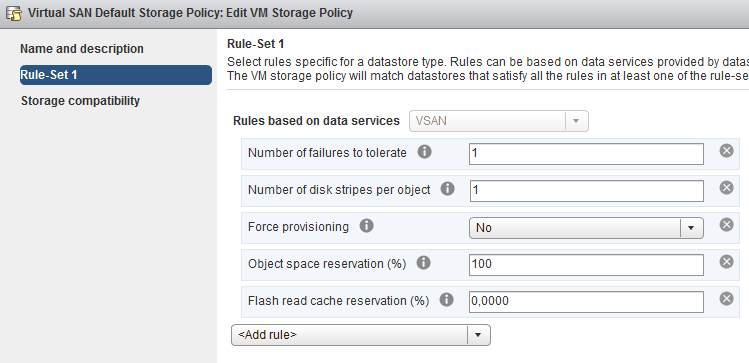

As stated at the beginning of the article, protection against failures is managed in software through storage policies assigned to VMs. Virtual SAN requires every VM placed on the VSAN datastore to be associated with a policy, so it provides a default one that tolerates one failure (of a host, a storage device or a network element), uses one disk stripe per object and thin-provisions the VMs' disks. To manage policies, go from the vSphere Web Client home page to Policies and Profiles > VM Storage Policies. To create a new policy, click the “Create a New VM Storage Policy” icon, enter a name and a description, and set the rules. The screenshot shows the rules of the default policy.

VSAN architecture

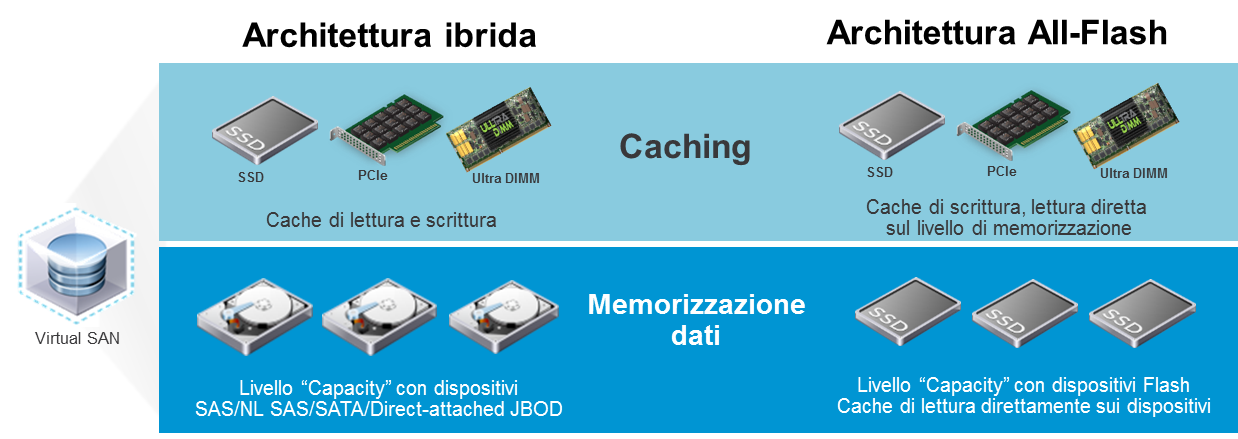

Depending on the devices chosen, a VSAN datastore can follow one of two architectures:

- Hybrid architecture: flash devices (SAS/SATA SSDs and PCIe flash) are used for caching and magnetic disks for data storage. Flash devices do not contribute to the available space at all, as they are used exclusively for caching (read cache and write buffer).

- All-Flash architecture: only flash devices (SSDs and PCIe flash) are used, offering consistently high performance. It is a new feature compared with VSAN 5.5, when the hybrid architecture was the only option. In an All-Flash configuration, each disk group uses one flash device as a write buffer, while the remaining devices store data.
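The two architectures differ mainly in how the cache tier is used. The sketch below assumes the split commonly documented for VSAN 6.x (in a hybrid disk group about 70% of the cache device serves as read cache and 30% as write buffer; in an All-Flash disk group the cache device is entirely a write buffer, reads being served by the capacity flash) and shows that in both cases only capacity-tier devices count toward datastore space. The function name and its output format are hypothetical.

```python
def disk_group_cache_split(cache_gb: float, capacity_gb: list[float],
                           all_flash: bool) -> dict[str, float]:
    """Split a disk group's cache device by role and total its capacity tier."""
    if all_flash:
        # All-Flash: the cache device only buffers writes; reads hit capacity flash.
        read_cache, write_buffer = 0.0, float(cache_gb)
    else:
        # Hybrid: assumed 70% read cache / 30% write buffer split.
        read_cache, write_buffer = cache_gb * 0.7, cache_gb * 0.3
    return {
        "read_cache_gb": read_cache,
        "write_buffer_gb": write_buffer,
        # In both architectures the cache tier adds nothing to datastore space.
        "capacity_gb": float(sum(capacity_gb)),
    }


print(disk_group_cache_split(400, [1200] * 7, all_flash=False))
print(disk_group_cache_split(400, [960] * 7, all_flash=True))
```

In the hybrid case the read cache matters because magnetic disks are slow to serve reads; in the All-Flash case the capacity devices are already fast at reading, so the cache device is dedicated to absorbing writes.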

New features introduced with VSAN 6.1

With Virtual SAN 6.1, VMware has pushed its hyperconverged solution to very high levels. The numbers speak for themselves: raw storage capacity of about 13.4PB (64 nodes × 35 disks × 6TB), continuous availability with an RPO of zero, and 2.5M IOPS with the hybrid architecture and 7M IOPS with the All-Flash architecture make it possible to support even the most demanding storage environments.
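The capacity figure is a simple multiplication, and it is a raw number: with the default policy (one failure to tolerate, hence two copies of every object) usable space is roughly half. The sketch below reproduces the calculation; it ignores on-disk format overhead, slack space and the difference between decimal and binary units, so treat the usable figure as an upper bound.

```python
NODES = 64
CAPACITY_DISKS_PER_NODE = 35   # 5 disk groups x 7 capacity devices
DISK_TB = 6


def raw_capacity_tb(nodes: int, disks_per_node: int, disk_tb: float) -> float:
    """Raw capacity: every capacity disk in every node."""
    return nodes * disks_per_node * disk_tb


def usable_capacity_tb(raw_tb: float, failures_to_tolerate: int = 1) -> float:
    """Upper bound on usable space with mirroring: raw / (failures + 1)."""
    return raw_tb / (failures_to_tolerate + 1)


raw = raw_capacity_tb(NODES, CAPACITY_DISKS_PER_NODE, DISK_TB)
print(f"raw: {raw:,} TB, usable with FTT=1: {usable_capacity_tb(raw):,.0f} TB")
# raw: 13,440 TB, usable with FTT=1: 6,720 TB
```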

Naturally, the new features are not limited to raw numbers. Below is a description of the new features that bring VSAN 6.1 in line with other enterprise SDS solutions.

New hardware options

- Support for DIMM-based SSDs: ULLtraDIMM flash storage installed in DIMM slots and connected to the memory channel, guaranteeing exceptionally low write latency (<5 µs).

- Support for NVMe SSDs, which use the new Non-Volatile Memory Express (NVMe) protocol.

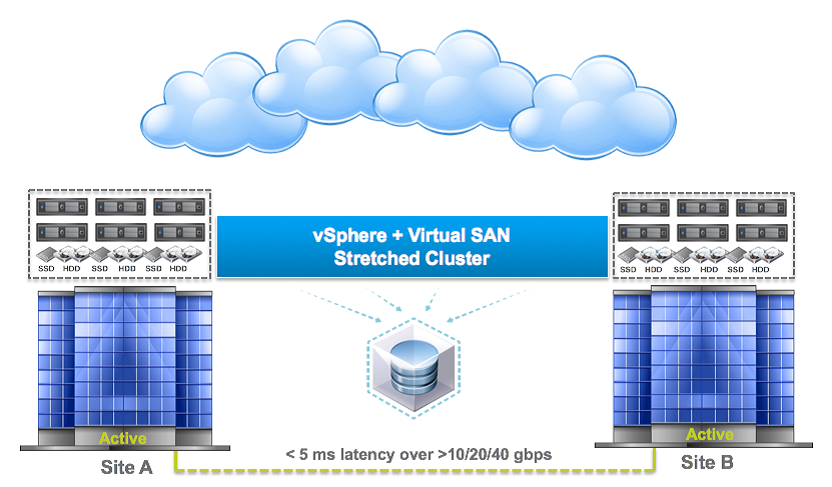

Virtual SAN Stretched Clusters, a new feature that allows a cluster to be extended across two geographically separated sites. Data is replicated synchronously between the two sites; if a whole site fails, downtime is near zero and no data is lost. This solution can also be integrated with VMware vMotion and vSphere DRS to balance the workload between the two sites, while Virtual SAN improves performance by serving each VM's read operations locally. The connection between the two sites must provide at least 10Gbit/s of bandwidth.

Logical scheme of Virtual SAN Stretched Cluster
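Why a whole site can fail without data loss is easier to see with a quorum model. The sketch assumes the stretched-cluster layout VMware documents for VSAN 6.1, which this article does not describe: one replica of each object in each data site plus a witness component on a third site, with an object staying accessible while the surviving components hold more than half of its votes and at least one full replica survives. One vote per component is a simplifying assumption, and the site names are hypothetical.

```python
COMPONENT_SITES = {"replica-a": "site-A", "replica-b": "site-B", "witness": "site-W"}
VOTES = {name: 1 for name in COMPONENT_SITES}  # simplifying assumption: one vote each


def object_accessible(failed_sites: set[str]) -> bool:
    """True if survivors hold a strict majority of votes and a full replica survives."""
    alive = [c for c, site in COMPONENT_SITES.items() if site not in failed_sites]
    alive_votes = sum(VOTES[c] for c in alive)
    has_replica = any(c.startswith("replica") for c in alive)
    return alive_votes * 2 > sum(VOTES.values()) and has_replica


for failed in [set(), {"site-A"}, {"site-W"}, {"site-A", "site-W"}]:
    print(sorted(failed), object_accessible(failed))
```

Losing either data site leaves the other replica plus the witness, which is still a majority; losing a data site and the witness at the same time is what makes the object unavailable.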

Virtual SAN ROBO (Remote Office / Branch Office), a VSAN cluster solution on just two hosts. It’s the ideal solution for small businesses and remote branches that still want to take advantage of high availability without facing the complexities brought by an enterprise storage system. The ROBO cluster can be managed centrally by a vCenter instance located at the “headquarters”.

Logical scheme of Virtual SAN ROBO

New integrated management and monitoring features: VSAN can now be integrated with vSphere Replication, with an RPO (Recovery Point Objective) ranging from 5 minutes to 24 hours. Integration with vRealize Operations is possible too, thanks to a set of functions that speed up troubleshooting and provide global visibility into the VSAN cluster. Lastly, the new Virtual SAN Health Check plugin for the vSphere Web Client lets you check the overall state of VSAN: it verifies the compatibility of the hardware in use, monitors driver and firmware versions, and runs diagnostic tests on the network and consistency checks on the configuration across the cluster.

Conclusions

VMware is devoting ever-growing attention to Virtual SAN. The improvements in capacity and availability figures, together with the new All-Flash architecture, allow it to meet the needs of virtualized applications, even business-critical ones.

Virtual SAN can certainly help many businesses move their storage from traditional virtual environments to the Software-Defined Datacenter, but it comes at a financial price. VMware Virtual SAN has a list price of $2,495 per CPU; the new All-Flash architecture is available as an add-on at an additional $1,495 per CPU. At these prices, VSAN on three dual-processor hosts costs $14,970 ($2,495/CPU × 6 CPUs): small businesses must carefully weigh such an investment.
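The licensing arithmetic generalizes easily. The sketch below uses the list prices quoted above (which may have changed since) and simply multiplies by the number of CPU sockets; it ignores discounts, support contracts and the vSphere licenses the hosts need anyway.

```python
VSAN_PER_CPU = 2495             # USD list price quoted in the article
ALL_FLASH_ADDON_PER_CPU = 1495  # USD list price of the All-Flash add-on


def vsan_list_price(hosts: int, cpus_per_host: int, all_flash: bool = False) -> int:
    """List price in USD for licensing VSAN on every CPU of the cluster."""
    cpus = hosts * cpus_per_host
    per_cpu = VSAN_PER_CPU + (ALL_FLASH_ADDON_PER_CPU if all_flash else 0)
    return cpus * per_cpu


print(vsan_list_price(3, 2))                  # 14970
print(vsan_list_price(3, 2, all_flash=True))  # 23940
```

Adding the All-Flash option to the same three dual-processor hosts raises the list price by about 60%, which sharpens the cost question for small businesses.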