Here are 7 simple rules to follow to guarantee the integrity of your data, without ever forgetting common sense.

This really should be stating the obvious: every professional or company should always have an up-to-date, complete backup that is easy to restore. Yet every time I talk to an IT manager, consultant or sysadmin I discover, to my great surprise, that some basic concepts are misunderstood or ???, if not ignored altogether.

Let’s list the 7 requirements of a good backup system.

1: “2 is better than 1”: an extra copy never hurts

Never assume that a single copy on an external HD, NAS or other resource is enough. Failures are always around the corner, and you’ll have to face them at the worst possible moment. Therefore keep your backups on at least two different media, even if the main one seems adequately reliable. You don’t have to duplicate everything: if you take, say, a complete backup of each computer, copying terabytes of data a second time is probably a waste. Critical data (i.e. documents and emails), however, must be backed up on at least two different media, both of them reliable.
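As a rough illustration of this rule, here is a minimal Python sketch that copies one folder of critical data to every destination in a list. The function name `copy_to_all` and the idea of passing the media as plain folder paths are assumptions made for the example, not a feature of any specific backup product.

```python
import shutil
from pathlib import Path
from typing import List


def copy_to_all(source: Path, destinations: List[Path]) -> List[Path]:
    """Copy the `source` folder into every destination and return the copies made."""
    copies = []
    for dest_root in destinations:
        target = dest_root / source.name
        # dirs_exist_ok lets the job run again over a previous copy (Python 3.8+).
        shutil.copytree(source, target, dirs_exist_ok=True)
        copies.append(target)
    return copies
```

You would call it with, for example, a documents folder and two mount points such as a NAS share and a USB disk (both hypothetical paths). If either copy fails, `shutil.copytree` raises an exception, so a failure on one medium does not go unnoticed.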

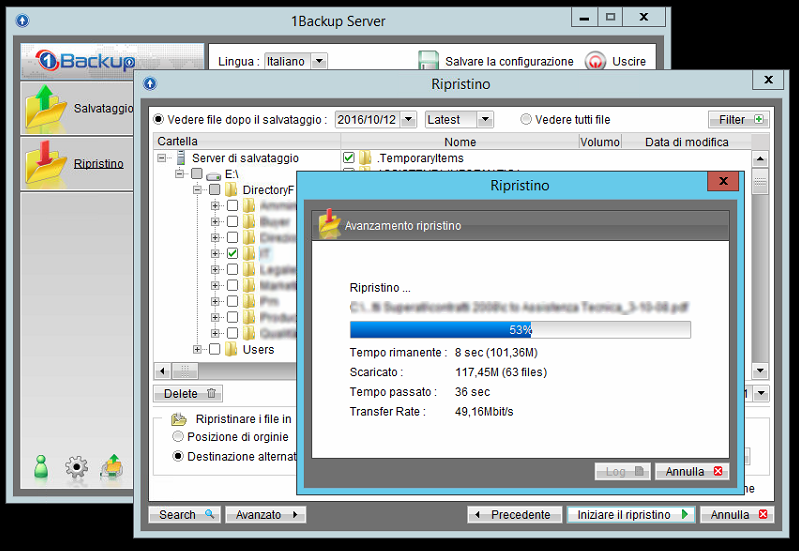

2: Test your backups

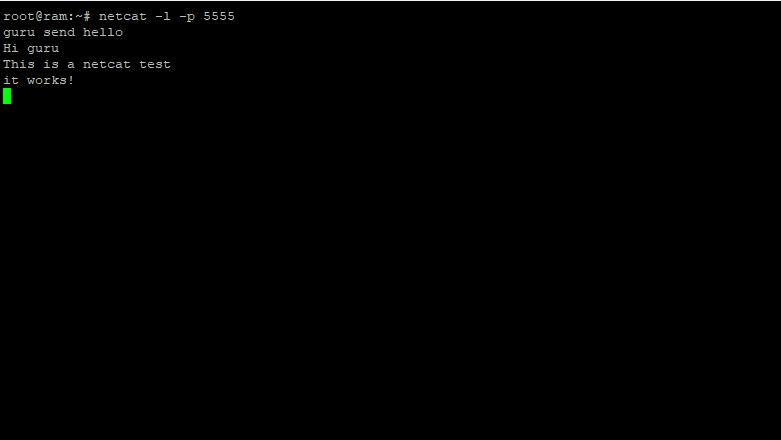

Most of the time the backup job itself completes fine; problems arise at restore time, and only when you actually need the data do you notice errors, missing files or other problems with what was saved. It is therefore of the utmost importance to test backups by restoring them in a test environment. Not all technologies require this check: for instance, if you are making a plain copy of a folder it’s unlikely that something goes wrong, provided the software alerts you to read/write errors (e.g. a file being accessed by several processes at the same time).

Things get harder when you use software that processes the data into a proprietary-format file. In this case the only way to test the backup is to actually restore it. Some software offers a verification feature that is a good starting point, but only a real, complete restore will make you sure of the result.

The chances of a backup being unusable grow further when it’s a “hot backup”, e.g. of a powered-on Virtual Machine running on a virtualization system, or a system image taken while Windows is running. A backup of this kind might not be consistent, in particular if you don’t use dedicated technologies like Microsoft VSS (Windows) or if the backup isn’t compatible with the applications involved, such as databases, SharePoint, Exchange and similar products. In these cases, a periodic restore is fundamental. More sophisticated tools like Veeam and HPE VM Explorer allow you to restore VMs into a virtual environment in a completely automated manner, to launch scripts at the end of the process and to capture screenshots that help you understand whether the restore has been successful. It’s not overkill; it’s a best practice that gives certainty about the result.
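For a plain file backup, a test restore can be checked automatically. The sketch below assumes you have restored the backup into a separate folder and still have the original data available. It compares the two trees file by file using SHA-256 digests. The names `tree_digests` and `compare_restore` are made up for this example.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def tree_digests(root: Path) -> Dict[str, str]:
    """Map each file's path (relative to `root`) to its SHA-256 digest."""
    digests = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # read_bytes() loads the whole file; hash in chunks for very large files.
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            digests[path.relative_to(root).as_posix()] = digest
    return digests


def compare_restore(original: Path, restored: Path) -> List[str]:
    """Return a list of problems; an empty list means every original file was restored intact."""
    want, got = tree_digests(original), tree_digests(restored)
    problems = [f"missing: {name}" for name in want if name not in got]
    problems += [
        f"corrupted: {name}"
        for name in want
        if name in got and want[name] != got[name]
    ]
    return problems
```

An empty result only tells you that the files match. For a system image or an application backup you still need to boot or open the restored copy.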

3: Use the right technologies

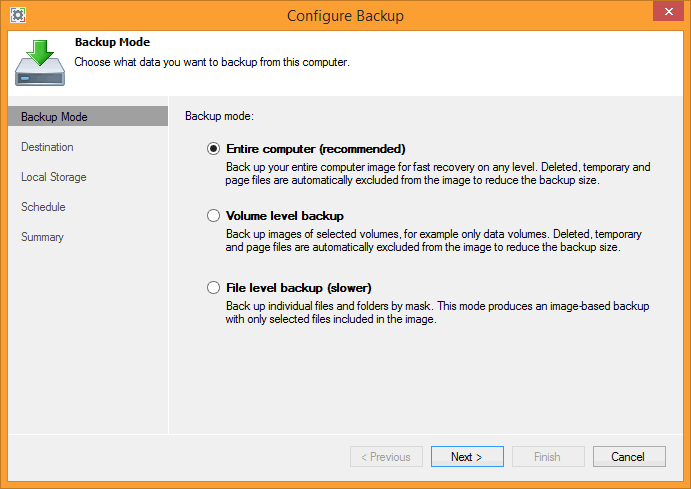

Following on from the previous point, it’s quite evident that backing up the complete image of a PC or VM isn’t always the best choice: first because it takes a lot of space to store and a lot of time to restore, and second because it doesn’t give all the guarantees you need that the restored system will work properly.

In most cases a copy of the database or of the critical files of the application you’re using lets you save plenty of space and perform backups more frequently, giving you a greater number of versions. However, the best technology to use depends on what you are backing up.

If you are backing up the contents of a file server, you will experience fewer problems by adopting a periodic synchronization policy with versioning. That way you can retrieve a single file without using third-party tools to extract data.

If you are backing up the contents of a database, you have to use a script compatible with the database server in use. You can’t “hot copy” its files, as the copies can end up corrupted and useless. As an alternative, you can use commercial software that supports databases, including the version of the database server you run.
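To make “synchronization with versioning” concrete, here is a small sketch under simplifying assumptions: the mirror is a plain folder, previous versions are kept in a `.versions/<stamp>/` subfolder of the mirror (a layout invented for this example), and files deleted from the source are ignored.

```python
import shutil
import time
from pathlib import Path
from typing import List


def sync_with_versions(source: Path, dest: Path, stamp: str = "") -> List[str]:
    """Mirror `source` into `dest`, keeping overwritten files under dest/.versions/<stamp>/."""
    stamp = stamp or time.strftime("%Y%m%d-%H%M%S")
    changed = []
    for src_file in sorted(p for p in source.rglob("*") if p.is_file()):
        rel = src_file.relative_to(source)
        dst_file = dest / rel
        if dst_file.exists():
            if src_file.read_bytes() == dst_file.read_bytes():
                continue  # identical content, nothing to do
            # Preserve the previous version before overwriting it.
            old = dest / ".versions" / stamp / rel
            old.parent.mkdir(parents=True, exist_ok=True)
            shutil.move(str(dst_file), str(old))
        dst_file.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src_file, dst_file)
        changed.append(rel.as_posix())
    return changed
```

Because both the mirror and the old versions are ordinary files, retrieving last week’s copy of a document is just a matter of browsing to the right folder.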
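The difference between copying database files and asking the database engine for a backup can be shown with SQLite, used here only as a stand-in since it ships with Python. Other database servers have their own dump or backup commands, which are not covered by this sketch. The function name `backup_sqlite` is an assumption for the example; `Connection.backup` is the standard `sqlite3` call (Python 3.7+).

```python
import sqlite3


def backup_sqlite(live_path: str, backup_path: str) -> None:
    """Copy a live SQLite database to `backup_path` through SQLite's online backup API."""
    source = sqlite3.connect(live_path)
    target = sqlite3.connect(backup_path)
    try:
        # The engine itself produces the copy, so the result is a consistent
        # database rather than a half-written file.
        source.backup(target)
    finally:
        target.close()
        source.close()
```

The same principle applies to any database server: let the engine, or a tool that talks to it, produce the backup instead of copying its files while it runs.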

If you are backing up SharePoint, you can also use its integrated backup tool, which is secure and can save the state of the whole system. This backup can also be scripted with ease and be performed as an incremental or complete backup.

Naturally, in addition to this script you can back up the VMs SharePoint is running on and, if possible, make another backup with dedicated software that offers an easy incremental restore.

If you’re using Exchange, then it’s time to move over to an online email system. Jokes aside, if you do use it, purchase commercial software for backup and granular restore, read the documentation very carefully and follow every best practice. Moreover, make the most of the features in Exchange for recovering deleted email, and perhaps extend the retention period beyond 30 days, too.

4: Granularity matters

This point goes hand in hand with the previous one. When you are asked to retrieve some files from a backup, you will probably neither want nor need to restore the whole backup. Perhaps you just need a single email or file: you must plan a restore strategy around how you manage backups and the technologies you use. What matters, in the end, is to be prepared for every circumstance.
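As a small example of a granular restore, suppose the backup is a plain ZIP archive (an assumption: many backup tools use their own formats and their own restore commands). Python’s `zipfile` module can then pull out one file without unpacking the rest. The function name `restore_single_file` is hypothetical.

```python
import zipfile
from pathlib import Path


def restore_single_file(archive: Path, member: str, target_dir: Path) -> Path:
    """Extract only `member` from the backup archive into `target_dir`."""
    with zipfile.ZipFile(archive) as zf:
        if member not in zf.namelist():
            raise FileNotFoundError(f"{member} is not in {archive}")
        # extract() writes just this member and returns the path it created.
        return Path(zf.extract(member, path=target_dir))
```

If your backup format cannot do something equivalent, that is worth knowing before the day someone asks for a single lost document.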

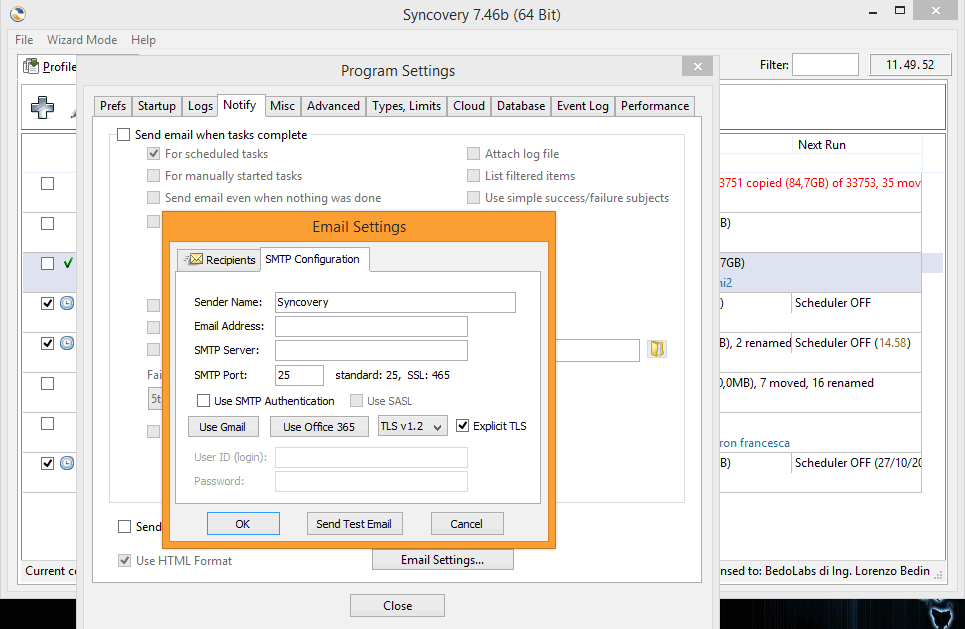

5: Notifications: yes, but with the proper clarity

Some backup software can send you a daily email with the complete log of all of the day’s backup activities. While this feature protects you against the loss of the logs stored on the server, it certainly won’t help you understand what’s going on, unless you have enough time every day to open the logs, extract the content and read every detail, following up on each error.

Good backup software will send you a clear, readable report that lets you understand whether things are good or bad just by reading the subject line. The text should include details of the files, VMs, databases, etc. that have errors.

This way you can verify what went wrong with the least effort, and perhaps postpone the check if the error concerns a low-importance file.

The report should also be sent when there are no files to back up or when the backup software isn’t running for some reason. Another problem that might arise is that the backup software stops sending emails altogether: because it’s no longer working, or because of a network problem, a configuration change, a changed SMTP server password, or some other reason. You must have a system that checks this doesn’t happen, otherwise you can’t monitor the state of your backups. If you receive a single email each day you might notice when it’s missing, but what if you receive dozens of emails from all your clients? Chances are you won’t notice a missing report.
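Here is one possible way to build such a report, as a sketch only. The `JobResult` structure, the `build_report` function and the subject format are all invented for the example; a real backup tool would supply its own data.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class JobResult:
    name: str  # e.g. a VM, a database or a folder
    errors: List[str] = field(default_factory=list)


def build_report(client: str, results: List[JobResult]) -> Tuple[str, str]:
    """Return (subject, body) so that the subject alone tells whether things are fine."""
    failed = [r for r in results if r.errors]
    if not results:
        status = "WARNING: nothing was backed up"
    elif failed:
        status = f"FAILED: {len(failed)} of {len(results)} items with errors"
    else:
        status = f"OK: {len(results)} items backed up"
    subject = f"[backup] {client} - {status}"
    # The body lists only the items that need attention.
    lines = [f"{r.name}: {err}" for r in failed for err in r.errors]
    return subject, "\n".join(lines) if lines else "No errors."
```

With a subject like this you can filter or sort your inbox by status, and open only the messages that report a failure.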
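The check for missing reports can be as simple as the following sketch. It assumes you record somewhere the time of the last report received from each client (how you collect that is up to you) and that a report is expected roughly once a day. The 26-hour tolerance and the name `overdue_clients` are arbitrary choices for the example.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def overdue_clients(
    expected: List[str],
    last_report: Dict[str, datetime],
    now: datetime,
    max_age: timedelta = timedelta(hours=26),
) -> List[str]:
    """Return the expected clients with no report at all, or none within `max_age`."""
    overdue = []
    for client in expected:
        seen = last_report.get(client)
        if seen is None or now - seen > max_age:
            overdue.append(client)
    return overdue
```

The important design choice is that the list of expected clients is kept separately from the reports themselves, so a client that has never sent anything is flagged too.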

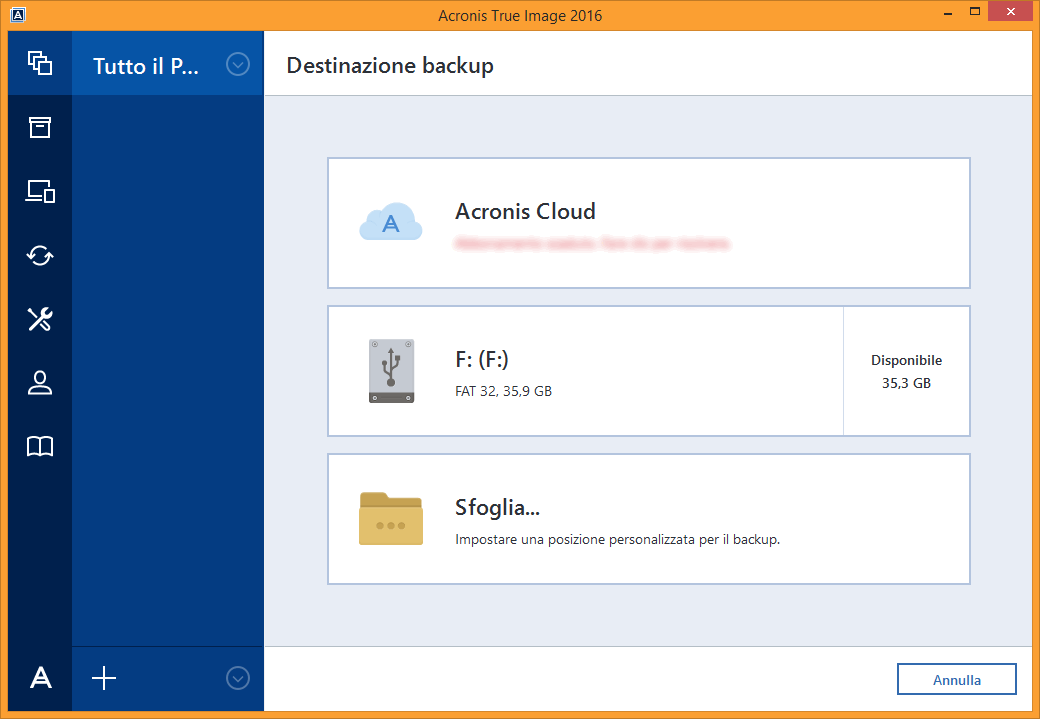

6: Intelligent use of the Cloud

If you’ve thought about a Disaster Recovery plan, you will naturally have considered copying data outside your company, to another office or to the Cloud.

When copying files to a remote location you could be tempted to copy everything that is also being copied to the local NAS. That’s an approach we don’t advise, especially if connectivity is limited. It’s better to use a backup service that supports the initial transfer of data by means of hard disks, and only then activate the incremental backup feature.
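To get a feel for what an incremental run would have to upload after the initial seed, you can list the files modified since the last successful backup and add up their sizes. This is a rough sketch based on modification times only; real backup services typically use more robust change detection. The name `changed_since` is made up for the example.

```python
from pathlib import Path
from typing import List, Tuple


def changed_since(root: Path, last_run: float) -> Tuple[List[Path], int]:
    """Return the files under `root` modified after `last_run` (epoch seconds) and their total size in bytes."""
    changed = [
        p
        for p in sorted(root.rglob("*"))
        if p.is_file() and p.stat().st_mtime > last_run
    ]
    return changed, sum(p.stat().st_size for p in changed)
```

Dividing the total size by your real upload speed tells you whether the nightly incremental fits in the time window you have.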

7: Don’t forget about Ransomware

Ransomware can encrypt backups too, so every backup medium must be protected against a possible attack, otherwise the backups themselves become useless.

Clearly, at least so far, the Cloud destination is intrinsically protected, but it’s important to limit access to backups within the local network too, so as to reduce the chances of vandalism by disgruntled employees or collaborators. Keep these potential threats in mind when planning access permissions for files and folders.
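As a starting point for reviewing permissions, the sketch below lists backup files that users other than the owner are allowed to modify. It relies on POSIX mode bits, so it applies to Linux or macOS storage and says nothing about Windows ACLs or NAS share permissions, which need their own checks. The function name `loosely_protected` is an assumption for the example.

```python
import stat
from pathlib import Path
from typing import List


def loosely_protected(backup_root: Path) -> List[Path]:
    """List files under `backup_root` that group members or other users may write to."""
    risky = []
    for path in sorted(backup_root.rglob("*")):
        if path.is_file() and path.stat().st_mode & (stat.S_IWGRP | stat.S_IWOTH):
            risky.append(path)
    return risky
```

An empty list does not make the backups ransomware-proof. It only confirms that write access is limited to the account that owns the files.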